An Analysis of The Loss Functions in Keras

Welcome to my friendly, non-rigorous analysis of the computer vision tutorials in keras. Keras is a popular high-level machine learning API in python, created by François Chollet and closely associated with Google's TensorFlow. Since I'm now working for CometML, which has an integration with keras, I thought it was time to check keras out. This post is part of a three-article series: my friend Yujian Tang and I are going to tag-team exploring keras together. Glad you're able to come on this journey with us.

This particular article is about the loss functions available in keras. To quickly define it, a loss function (sometimes called an error function) is a measure of the difference between the actual values and the estimated values in your model. During training, ML models adjust their parameters to minimize the loss, which finds the best fit for a given set of data (the estimated values end up as close as possible to the actual values). The most well-known loss function is probably the Mean Squared Error (MSE) that we use in linear regression (MSE is used in many other applications, but linear regression is where most people first see it). It is also common to see RMSE, which is just the square root of the MSE.
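To make the MSE and RMSE definitions concrete, here is a minimal pure-Python sketch on a handful of made-up values (the numbers are purely illustrative). In keras the same quantity is available as `keras.losses.MeanSquaredError`; the helper names `mse` and `rmse` below are hypothetical and written out by hand so the arithmetic is visible.

```python
import math

def mse(y_true, y_pred):
    """Mean squared error: the average of the squared differences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error: the square root of the MSE, in the target's own units."""
    return math.sqrt(mse(y_true, y_pred))

actual = [3.0, 5.0, 2.0, 7.0]
estimated = [2.5, 5.0, 3.0, 8.0]

print(mse(actual, estimated))   # squared errors 0.25, 0, 1, 1 -> 2.25 / 4 = 0.5625
print(rmse(actual, estimated))  # sqrt(0.5625) = 0.75
```

Taking the square root puts RMSE back on the same scale as the values being predicted, which is why it is often the easier of the two to report.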

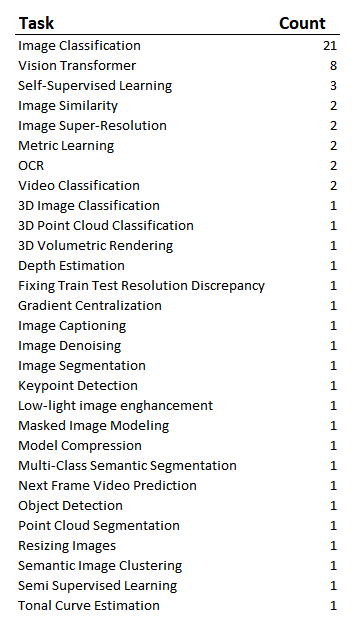

Here we're going to cover which loss functions were used to solve different problems in the keras computer vision tutorials. There were 68 computer vision examples, and 63 used loss functions (not all of the tutorials built models). I was interested to see what types of problems were solved and which particular algorithms were used with the different loss functions. I decided that aggregating this data would give me a rough idea of which loss functions are commonly used to solve the different problems. Although I'm well versed in certain machine learning algorithms for building models with structured data, I'm much newer to computer vision, so exploring the computer vision tutorials is interesting to me.

Things that I'm hoping to understand when it comes to the different loss functions available in keras:

Are they all being used?

Which functions are the real workhorses?

Is it similar to what I've been using for structured data?

Before we get started: if you've tried Coursera or other MOOCs to learn python and you're still looking for a course that'll take you much further (working in VS Code, setting up your environment, and learning through realistic projects), this is the course I used: Python Course.

Let's start with the available loss functions. In keras, your options are:

The Different Groups of Keras Loss Functions

The losses are grouped into Probabilistic, Regression and Hinge. You're also able to define a custom loss function in keras, and 9 of the 63 modeling examples in the tutorials had custom losses. We'll take a quick look at the custom losses as well. The differences between the groups:

Probabilistic Losses - Used for classification problems where the output is a probability between 0 and 1.

Regression Losses - When our predictions are going to be continuous.

Hinge Losses - Another set of losses for classification problems, most commonly associated with support vector machines. Distance from the classification boundary is taken into account, and you're penalized if a prediction isn't far enough on the correct side of the boundary.
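The hinge idea of "penalized if the distance is not large enough" can be sketched in a few lines of plain python. This is a minimal sketch assuming the usual binary hinge formula, max(0, 1 - y * score), with labels given as 0/1 mapped to -1/+1 first (the keras `Hinge` loss documents the same mapping); `hinge_terms` and `hinge` are hypothetical helper names, not the keras API.

```python
def hinge_terms(y_true, scores):
    """Per-sample hinge loss max(0, 1 - y * score).

    Labels given as 0/1 are mapped to -1/+1 first; -1/+1 labels pass through unchanged.
    """
    labels = [2 * y - 1 if y in (0, 1) else y for y in y_true]
    return [max(0.0, 1.0 - y * s) for y, s in zip(labels, scores)]

def hinge(y_true, scores):
    """Mean hinge loss over the samples."""
    terms = hinge_terms(y_true, scores)
    return sum(terms) / len(terms)

y_true = [1, 1, 0, 0]
scores = [2.0, 0.5, 0.3, -1.5]  # raw classifier scores; the sign is the predicted side

print(hinge_terms(y_true, scores))  # [0.0, 0.5, 1.3, 0.0]
print(hinge(y_true, scores))        # about 0.45
```

The second sample is on the correct side of the boundary but inside the margin, so it is still penalized (0.5); only predictions that clear the margin, like the first and last samples, cost nothing.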

This exercise was also a fantastic way to see the different types of applications of computer vision. Many of the tutorials covered applications I had never thought about. Hopefully it's eye-opening for you as well, and you don't even have to go through the exercise of looking at each tutorial!

To have an understanding of the types of problems that were being solved in the tutorials, here's a rough list:

Image Classification Loss Functions

So, since image classification was the most frequent type of problem in the tutorials, we'd expect to see many probabilistic losses. But which loss functions were actually used in those image classification problems?

We see that sparse categorical crossentropy (also called softmax loss) was the most common. Sparse categorical crossentropy and categorical crossentropy compute the same loss; they differ only in how the labels are encoded. If your output variable is one-hot encoded you'd use categorical crossentropy; if your output variable is integer class indices, you'd use the sparse version. Binary crossentropy is used when you only have two classes. In the function below, "n" is the number of classes, and in the case of binary crossentropy the number of classes will be 2, because binary classification problems have only 2 potential outputs (classes): the output can be 0 or 1.
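As a minimal pure-python sketch of the cross-entropy formula, loss = -(sum over i = 1..n of y_i * log(p_i)), here are the categorical, sparse, and binary versions side by side for a single sample. The function names are hypothetical (not the keras API), and the keras implementations also guard against log(0) and average over a batch, which this sketch leaves out.

```python
import math

def categorical_crossentropy(one_hot, probs):
    """-sum_i y_i * log(p_i) for one sample with a one-hot label.

    Terms where y_i is 0 contribute nothing, so they are skipped (this also avoids log(0)).
    """
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y > 0)

def sparse_categorical_crossentropy(class_index, probs):
    """Same loss, but the label is an integer class index instead of a one-hot vector."""
    return -math.log(probs[class_index])

def binary_crossentropy(y, p):
    """Two-class case (n = 2): p is the predicted probability of class 1."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

probs = [0.1, 0.7, 0.2]  # softmax output for 3 classes; the true class is 1

print(categorical_crossentropy([0, 1, 0], probs))  # about 0.357
print(sparse_categorical_crossentropy(1, probs))   # same value, about 0.357
print(binary_crossentropy(1, 0.7))                 # also about 0.357
```

The one-hot and integer-label versions give the same number because only the probability assigned to the true class matters; the binary version is the n = 2 case with probabilities [1 - p, p].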

Keras Custom Loss Functions

Another thing I noticed is that none of the image classification problems used a custom-defined loss; all of them used one of those 3 loss functions. For the 14% of tutorials that used a custom-defined loss, what type of problem were they trying to solve? (These are two separate lists; don't read them left to right.)

Regression Loss Functions

Next I was interested to see which loss functions were used most frequently in the tutorials for regression problems. There were only 6 regression problems, so the sample is quite small.

It was interesting that only two of the losses were used. We did not see mean absolute percentage error, mean squared logarithmic error, cosine similarity, huber, or log-cosh. It feels good to see the losses I'm most familiar with being used in these problems; it makes everything feel much more approachable. Because the MSE squares each error, it penalizes large differences between the actual and estimated values much more heavily than the MAE does. So if a few large misses (for example, from outliers) shouldn't dominate the loss, you might go with MAE; if large errors are especially costly, go with MSE.
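To see the MSE vs. MAE trade-off in numbers, here is a minimal pure-python sketch with made-up values (the helper names `mse` and `mae` are hypothetical; keras provides these as `keras.losses.MeanSquaredError` and `keras.losses.MeanAbsoluteError`). Both sets of estimates miss by a total of 4, but in one case the error is spread out and in the other it is concentrated in a single prediction.

```python
def mse(y_true, y_pred):
    """Mean squared error: large errors count quadratically."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    """Mean absolute error: every unit of error counts the same."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

actual = [10, 10, 10, 10]
small_errors = [11, 9, 11, 9]     # every estimate is off by 1
one_big_error = [10, 10, 10, 14]  # three are exact, one is off by 4

print(mae(actual, small_errors), mae(actual, one_big_error))  # 1.0 1.0
print(mse(actual, small_errors), mse(actual, one_big_error))  # 1.0 4.0
```

MAE can't tell the two cases apart, while MSE is four times larger when the error is concentrated in one prediction, which is exactly the "penalize large differences" behavior described above.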

Implementing Keras Loss Functions

If you're just getting started in keras, building a model looks a little different. Defining the loss function itself is straightforward, but let's chat about the couple of lines that precede it in the tutorial (this code is taken straight from the tutorial). The two most common ways to build models in keras are the Sequential API and the Functional API (you can also subclass keras.Model). Here we're building a sequential model. The Sequential API lets you create a deep learning model by instantiating the Sequential class and then adding layers to it. The keras.Sequential() constructor accepts the optional arguments "layers" and "name", but here we're adding the layers one at a time instead.

The first model.add line adds a fully connected (Dense) layer with 64 units and sets its kernel initializer. "kernel_initializer" defines the statistical distribution of the starting weights for your model; in this example the starting weights are drawn from a uniform distribution. The model is just that single Dense layer followed by a softmax activation. The loss function is passed in during the compile stage. Here the optimizer being used is adam; if you want to read more about optimizers in keras, check out Yujian's article here.

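```python
from tensorflow import keras
from tensorflow.keras import layers

# Create an empty Sequential model, then add layers one at a time
model = keras.Sequential()

# Dense layer with 64 units on 10-feature inputs; starting weights drawn from a uniform distribution
model.add(layers.Dense(64, kernel_initializer='uniform', input_shape=(10,)))

# Softmax turns the 64 outputs into class probabilities that sum to 1
model.add(layers.Activation('softmax'))

# Labels are integer class indices (0-63), so use the sparse version of categorical crossentropy
loss_fn = keras.losses.SparseCategoricalCrossentropy()

# The loss function is passed in at the compile stage
model.compile(loss=loss_fn, optimizer='adam')
```

Summary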

I honestly feel better after taking a look at these computer vision tutorials. Although there were plenty of custom loss functions that I wasn't familiar with, the majority of the use cases relied on friendly loss functions that I already knew. I also think I'll be a little more confident choosing a loss function for computer vision problems in the future. Sometimes I feel like things are going to be super fancy or complicated, like when "big data" was first becoming a popular buzzword, but when I take a look myself it's less scary than I thought. If you found this helpful, be sure to let me know. I'm easy to find on LinkedIn and might write more articles like this if people find this type of non-rigorous analysis interesting.

And of course, if you're building ML models and want to easily track and compare your runs and make them reproducible, I highly suggest you check out the CometML library in python or R :)

My Favorite R Programming Course

Note: This article includes affiliate links. Meaning at no cost to you (actually, you get a discount, score!) I will receive a small commission if you purchase the course.

I've been using R since 2004, long before the Tidyverse was introduced. I knew I'd benefit from fully getting up to speed on the newest packages and functionality, and I finally decided to take the plunge and fully update my skills. I wanted a course that was going to cover every nook and cranny in R. My personal experience learning R had been pasting together tutorials and reading documentation as I needed something. I wanted to be introduced to functions I may not need now, but may have a use case for in the future. I wanted everything.

I've known for a while that the Tidyverse was a massive improvement over using base R functionality for manipulating data. However, I also knew my old school skills got the job done. I have now seen the light. There is a better way. It wasn't super painful to make the move (especially since I'm familiar with programming) and the Business Science's "Business Analysis with R" course will take you from 0 to pretty dangerous in 4 weeks.

If you have no R experience and don't want to bang your head against the wall for years trying to get to a "serious R user" level, I highly recommend Business Science's "Business Analysis with R" course. Don't let the name fool you: the course spends 5 hours using the parsnip package for machine learning techniques and, more importantly, on how to communicate those results to stakeholders.

The course was thorough, clear, and concise.

Course Coverage

General:

The course takes you from the very beginning:

- Installing R

- Setting up your work environment

  - full disclosure, I even learned new tips and tricks in that section

- and then straight into a relevant business analysis using transactional data

This course "holds your hand" on your journey to becoming self-sufficient in R. I couldn't possibly list everything that is covered in the course in this article; that would make no sense. However, the most life-changing topics for me were:

- regex using stringr

  - Working with strings is a different world in the Tidyverse compared to base R. I can't believe how much more difficult I had been making my life.

- working with date times using lubridate

  - The beginning of my career was solely in econometric time series analysis. I could have used this much earlier.

- formatting your visualizations

  - This is another area where I have lost significant hours of my life that I'll never get back through the process of learning R. Matt can save you the pain I suffered.

All of the material that I would have wanted was here. All of it.

Modeling & Creating Deliverables:

Again, do not let the title of the course fool you. This course gets HEAVY into machine learning, spending 5 HOURS on the parsnip library (think of it as the scikit-learn of R).

The course goes through:

- K-means

- Regression & GLM

- tree methods

- XGBoost

- Support Vector Machines

And then teaches you how to create deliverables in R-markdown and interactive plots in Shiny. All in business context and always emphasizing how you'll "communicate it to the business". I can't stress enough how meticulous the layout of the course is and how much material is covered. This course is truly special.

How many tutorials or trainings have you had over the years where everything looked all "hunky dory" when you were in class? Then you try to adopt those skills and apply them to personal projects and there are huge gaping holes in what you needed to be successful. I have plenty of experience banging my head on the wall trying to get things to work with R.

Things You'll Love:

- Repetition of keyboard short-cuts so that I'll actually remember them.

- Immediately using transactional data to walk through an analysis. You're not only learning R, you're learning the applications and why the functions are relevant, immediately.

- Reference to the popular R cheatsheets and documentation. You'll leave here understanding how to read the documentation and R cheatsheets - and let's be honest, a good portion of being a strong programmer is effective googling skills. Learning to read the documentation is equivalent to teaching a man to fish.

- Matt has a nice voice. There, I said it. If you're going to listen to something for HOURS, I feel like this is a relevant point to make.

For the beginner:

- Instruction starts right at the beginning and the instruction is clear.

- Code to follow along with the lecture is crazy well organized. Business Science obviously prides itself on structure.

- There is no need to take another R basics course, where you'd repeat learning a bunch of stuff that you've learned before. This one course covers everything you'll need. It. Is. Comprehensive.

- e-commerce/transactional data is an incredibly common use case. If you're not familiar with how transactional data works or you've never had to join multiple tables, this is fantastic exposure/a great use case for the aspiring data scientist.

- A slack channel with direct access to Matt (course creator) if you have any questions. I didn't personally use this feature, but as a newbie it's a tremendous value to have direct access to Matt.

I'm honestly jealous that I wasn't able to learn this way the first time, but the Tidyverse didn't even exist back then. Such is life.

The course ends with a k-means example with a deliverable that has been built in R-markdown that is stakeholder ready. This course is literally data science demystified.

In Summary:

Maybe I'm too much of a nerd. But seeing a course this well executed that provides this much value is so worth the investment to me. The speed of the transformation you'll make through taking this course is incredible. If this was available when I first started learning R I would have saved myself so much frustration. Matt Dancho (owner of Business Science) was kind enough to give me a link so that you can receive 15% off of the course. Link

The 15% off is an even better deal if you buy the bundle, but to be honest I haven't taken the 2nd course yet. I certainly will! And I'll definitely write a review afterwards to let you know my thoughts. Here is the link to the bundle: Link

If you're feeling like becoming a data science rockstar, Matt launched a brand new course and you're able to buy the 3-course bundle. The new course is "Predictive Web Applications For Business With R Shiny": Link

If you take the course, please let me know if you thought it was as amazing as I did. You can leave a testimonial in the comment or reach out to me on LinkedIn. I'd love to hear your experience!

Asking Great Questions as a Data Scientist

Asking questions can sometimes seem scary. No one wants to appear "silly." But I assure you:

- You're not silly.

- It's way more scary if you're not asking questions.

Data Science is a constant collaboration with the business and a series of questions and answers that allow you to deliver the analysis/model/data product that the business has in their head.

Questions are required to fully understand what the business wants and not find yourself making assumptions about what others are thinking.

Asking the right questions, like those you identified here is what separate Data Scientists that know 'why' from folks that only know what (tools and technologies).

-Kayode Ayankoya

We're going to answer the following questions:

- Where do we ask questions?

- What are great questions?

I had posted on LinkedIn recently about asking great questions in data science and received a ton of thought provoking comments. I will add a couple of my favorite comments/quotes throughout this article.

Where do we ask questions?

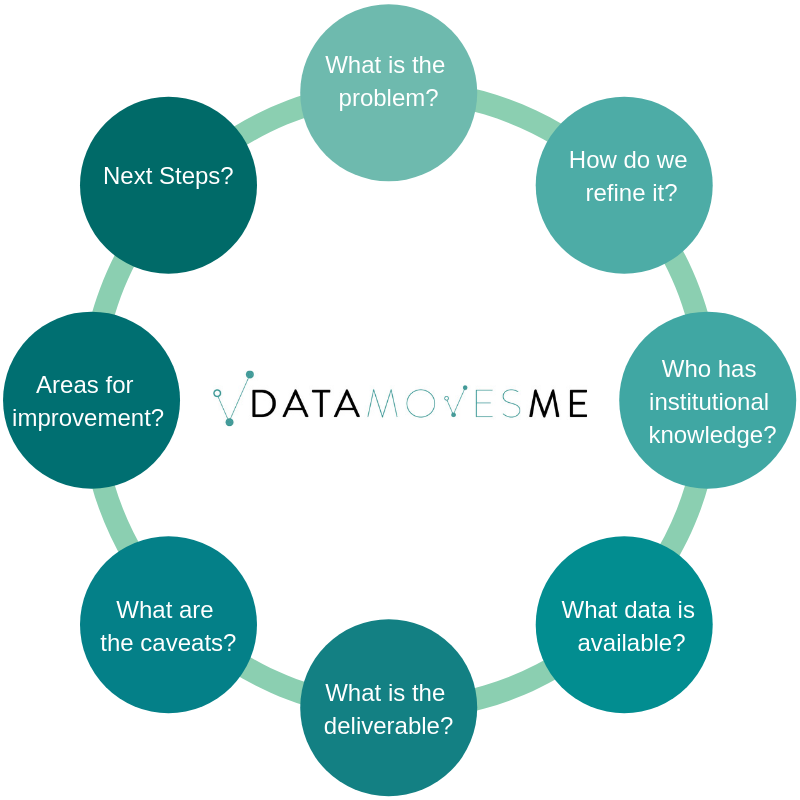

Basically every piece of the pipeline can be expressed as a question:

And each of these questions could involve a plethora of follow up questions.

To scratch the surface, Kate Strachnyi posted a great assortment of questions that we typically ask (or want to consider) when scoping an analysis:

Few questions to ask yourself:

How will the results be used? (make business decision, invest in product category, work with a vendor, identify risks, etc)

What questions will the audience have about our analysis? (ability to filter on key segments, look at data across time to identify trends, drill-down into details, etc)

How should the questions be prioritized to derive the most value?

Who should be able to access the information? think about confidentiality/ security concerns

Do I have the required permissions or credentials to access the data necessary for analysis?

What are the different data sources, which variables do I need, and how much data will I need to get from each one?

Do I need all the data for more granular analysis, or do I need a subset to ensure faster performance?

-Kate Strachnyi

Kate's questions spanned both:

- Questions you'd ask stakeholders/different departments

- Questions you'd ask internally on the data science/analytics team.

Any of the questions above could yield a variety of answers, so it is imperative that you're asking questions. Just because you have an awesome idea in your mind for approaching the problem does not mean that other people don't have similarly awesome ideas that need to be heard and discussed. At the end of the day, data science typically serves as a support function to other areas of the business, meaning we can't just go rogue.

In addition to getting clarification and asking questions of stakeholders of the project, you'll also want to collaborate and ask questions of those on your data science team.

Even the most seasoned data scientist will sometimes find themselves creating a methodology or solution that isn't in their area of expertise, or applying an algorithm to a unique use case that would benefit from the thoughts of other data subject matter experts. Oftentimes the person listening to your proposed methodology will just give you the thumbs up, but when you've been staring at your computer for hours there is also a chance that you haven't considered one of the underlying assumptions of your model or that you're introducing bias somewhere. Someone with fresh eyes can give a new perspective and save you from discovering your error AFTER you've presented your results.

Keeping your methodology a secret until you deliver the results will not do you any favors. If anything, sharing your thoughts upfront and asking for feedback will help to ensure a successful outcome.

What are great questions?

Great questions are the ones that get asked. However, there is an art and science to asking good questions and also a learning process involved. Especially when you're starting at a new job, ask everything. Even if it's something that you believe you should already know, it's better to ask and course-correct, than to not ask. You could potentially lose hours working on an analysis and then have your boss tell you that you misunderstood the request.

It is helpful to also pose questions in a way that requires more than a "yes/no" response, so you can open up a dialogue and receive more context and information.

How we formulate the questions is also very important. I've often found that people feel judged by my questions. I have to reassure them that all I want is to understand how they work and what are their needs and that my intention is not to judge them or criticize them.

-Karlo Jimenez

I've experienced what Karlo mentioned myself. Being direct can sometimes come off as judgement. We definitely need to put on our "business acumen" hats to the best of our ability and come across as someone who is genuinely trying to understand and deliver on their needs. I've found that if I can pose the question as "looking for their valuable feedback", it's a win-win for everyone involved.

As you build relationships with your team and stakeholders, this scenario becomes much less likely. Once everyone gets to know your personality and you've built a rapport, people will expect your line of questioning.

Follow up questions, in its various forms, are absolutely critical. Probing gives you an opportunity to paraphrase the ask and gain consensus before moving forward.

-Toby Baker

Follow-up questions feel good. When a question prompts another question you feel like you're really getting somewhere. Peeling back another layer of the onion if you will. You're collaborating, you're listening, you're in the zone.

In Summary

The main takeaway here is that there are a TON of questions you need to ask to effectively produce something that the business wants. Once you start asking questions, it'll become second nature and you'll immediately see the value and find yourself asking even more questions as you gain more experience.

Questioning has been instrumental to my career. An additional benefit is that I've found my 'voice' over the years. I feel heard in meetings and my opinion is valued. A lot of this growth has come from getting comfortable asking questions and I've also learned a ton about a given business/industry through asking these questions.

I've learned a lot about diversity of viewpoints and that people express information in different ways. This falls under the "business acumen" piece of data science that we're not often taught in school. But I hope you can go forward and fearlessly ask a whole bunch of questions.

Also published on KDNuggets: link

Life Changing Moments of DataScienceGO 2018

DataScienceGO is truly a unique conference. Justin Fortier summed up part of the ambiance when replying to Sarah Nooravi's LinkedIn post. And although I enjoy a good dance party (more than most), there were a number of reasons why this conference (in particular) was so memorable.


- Community

- Yoga + Dancing + Music + Fantastic Energy

- Thought provoking keynotes (saving the most life changing for last)

Community

In Kirill's keynotes he mentioned that "community is king". I've always truly subscribed to this thought, but DataScienceGO brought it to life. I met amazing people: some I had been building relationships with online for months but hadn't yet had the opportunity to meet in person, and some I had never heard of before. EVERYONE was friendly. I mean it, I didn't encounter a single person who was not friendly. I don't want to speak for others, but I got the sense that people had an easier time meeting new people than at previous conferences I've attended. It really was a community feeling. Lots of pictures, tons of laughs, and plenty of nerdy conversation to be had.

If you're new to data science but have been self-conscious about being active in the community, I urge you to put yourself out there. You'll be pleasantly surprised.

Yoga + Dancing + Music + Fantastic Energy

Both Saturday and Sunday morning I attended yoga at 7am. To be fully transparent, I have a 4-year-old and a 1-year-old at home. I thought I was going to use this weekend as an opportunity to sleep a bit. Instead, I went home more tired than when I arrived. Positive, energized, and full of gratitude, but exhausted.

Have you ever participated in morning yoga with 20-30 data scientists? If you haven't, I highly recommend it. It was an incredible way to start the day; Jacqueline Jai brought the perfect mix of yoga and humor for a group of data scientists. After yoga each morning you'd go to the opening keynote of the day. This would start off with dance music, lights, sometimes the fog machine, and a bunch of dancing data scientists. My kind of party. The energized start mixed with the message of community really set the pace for a memorable experience.

Thought Provoking Keynotes

Ben Taylor spoke about "Leaving an AI Legacy", Pablos Holman spoke about actual inventions that are saving human lives, and Tarry Singh showed the overwhelming (and exciting) breadth of models and applications in deep learning. Since the conference I have taken a step back and have been thinking about where my career will go from here. In addition, Kirill encouraged us to think of a goal and to start taking small actions towards that goal starting today.

I haven't nailed down yet how I will have a greater impact, but I have some ideas (and I've started taking action). It may be in the form of becoming an adjunct professor to educate the next wave of mathematicians and data scientists. Or I hope to have the opportunity to participate in research that will help solve some of the world's problems and make someone's life better.

I started thinking about my impact (or using modeling for the forces of good) a couple of weeks ago when I was talking with Cathy O'Neil for the book I'm writing with Kate Strachnyi, "Mothers of Data Science". Cathy is pretty great at making you think about what you're doing with your life, and this could be its own blog article. But attending DSGO was the icing on the cake in terms of forcing me to consider the impact I'm making.

Basically, the takeaway that I'm trying to express is that this conference pushed me to think about what I'm currently doing, and about what I can do in the future to help others. Community is king in more ways than one.

Closing

I honestly left the conference with a couple of tears. Happy tears, probably provoked a bit by being so overtired. There were so many amazing speakers in addition to the keynotes. I particularly enjoyed being on the Women's panel with Gabriela de Queiroz, Sarah Nooravi, Page Piccinini, and Paige Bailey, talking about our real-life experiences as data scientists in a male-dominated field and about the need for diversity in business in general. I love being able to connect with other women who share a similar bond and passion.

I was incredibly humbled to have the opportunity to speak at this conference and also cheer for the talks of some of my friends: Rico Meinl, Randy Lao, Tarry Singh, Matt Dancho and other fantastic speakers. I spoke about how to effectively present your model output to stakeholders, similar to the information that I covered in this blog article: Effective Data Science Presentations


This article is obviously an oversimplification of all of the awesomeness that happened during the weekend. But if you missed the conference, I hope this motivates you to attend next year so that we can meet. And I urge you to watch the recordings and reflect on the AI legacy you want to leave behind. I haven't seen the link to the recordings from DataScienceGO yet, but when I find them I'll be sure to link here.

Setting Your Hypothesis Test Up For Success

Setting up your hypothesis test for success as a data scientist is critical. I want to go deep with you on exactly how I work with stakeholders ahead of launching a test. This step is crucial to make sure that once a test is done running, we'll actually be able to analyze it. This includes:

A well defined hypothesis

A solid test design

Knowing your sample size

Understanding potential conflicts

Population criteria (who are we testing)

Test duration (it's like the cousin of sample size)

Success metrics

Decisions that will be made based on results

This is obviously a lot of information. Before we jump in, here is how I keep it all organized: I recently created a Google Doc at work so that stakeholders and analytics could align on all the information needed to fully scope a test upfront. This also gives you (the analyst/data scientist) a bit of an insurance policy. It's possible the business decides to go with a design or a sample size that wasn't your recommendation. If things end up working out less than stellar (not enough data, or a design that is basically impossible to analyze), you have your original suggestions documented.

In my previous article I wrote:

"Sit down with marketing and other stakeholders before the launch of the A/B test to understand the business implications, what they’re hoping to learn, who they’re testing, and how they’re testing. In my experience, everyone is set up for success when you’re viewed as a thought partner in helping to construct the test design, and have agreed upon the scope of the analysis ahead of launch."

Well, this is literally what I'm talking about. This document was born of things that we often see in industry:

Hypothesis: I've seen scenarios that look like "we're going to make this change, and then we'd like you to read out on the results". So, what is your hypothesis? You're going to make this change, and what do you expect to happen? Why are we doing this? A hypothesis clearly states the change that is being made, the impact you expect it to have, and why you think it will have that impact. It's not an open-ended statement. You are testing a measurable response to a change. It's OK to be a stickler; this is your foundation.

Test Design: The test design needs to be solid, so you'll want to understand exactly what change is being made between test and control. If a stakeholder approaches you with a design that won't allow you to accurately measure the criteria, you'll want to coach them on how they could design the test more effectively to read out on the results. I cover test design a bit in my article here.

Sample Size: You need to understand the sample size and your expected effect size ahead of launch. If you run with a small sample and need an unreasonably large effect size for it to be significant, the test is most likely not worth running. Time to rethink your sample and your design. Sarah Nooravi recently wrote a great article on determining sample size for a test. You can find Sarah's article here.

An example might be that you want to test the effect of offering a service credit to select customers. You have a fixed budget of credits you're allowed to give out, so you're hoping you can have 1,500 customers in test and 1,500 in control (this is small). The test experience sees the service along with a coupon, and the control experience sees content advertising the service but no mention of the credit. If the average purchase rate is 13.3%, you would need a 2.6-point increase in the test group (to 15.9%) to see significance at 95% confidence. This is a large effect size that we probably won't achieve (unless the credit is AMAZING). It's good to know these things upfront so that you can make changes (for instance, reduce the amount of the credit to allow for a larger sample size, ask for extra budget, etc.).
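To make the arithmetic in this example concrete, here is a minimal Python sketch. It assumes a standard two-sided, two-proportion z-test with a pooled standard error (the post doesn't say which test was used), and the function names are hypothetical. It scans increases in 0.1-point steps and returns the smallest lift over the 13.3% baseline that would come out significant with 1,500 customers per group.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z(p_control, p_test, n_control, n_test):
    """z statistic for the difference between two proportions, using a pooled standard error."""
    pooled = (p_control * n_control + p_test * n_test) / (n_control + n_test)
    se = sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_test))
    return (p_test - p_control) / se


def smallest_significant_lift(baseline, n_per_group, confidence=0.95, step=0.001):
    """Smallest lift over baseline, searched in `step` increments, that is significant (two-sided)."""
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    k = 1
    while baseline + k * step < 1:
        lift = k * step
        if two_proportion_z(baseline, baseline + lift, n_per_group, n_per_group) >= z_crit:
            return round(lift, 6)
        k += 1
    return None  # nothing below a 100% purchase rate would be significant


lift = smallest_significant_lift(baseline=0.133, n_per_group=1500)
print(f"Smallest significant lift: {lift * 100:.1f} points (test group at {0.133 + lift:.1%})")
```

Keep in mind this is only the threshold at which an *observed* lift would reach significance. To have a good chance of detecting a true lift (for example, 80% power), you need an even larger effect or a larger sample, which reinforces the point that 1,500 per group is small.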

Potential Conflicts: It's possible that 2 different groups in your organization could be running tests at the same time that conflict with each other, producing data that is junk for potentially both tests. (I actually used to run a "testing governance" meeting at my previous job to proactively identify these cases; this might be something you want to consider.)

An example of a conflict might be that the acquisition team is running a Google ad advertising 500 business cards for $10. If, while that test is running, another team runs a pricing test on the business card product page that doesn't honor the price in the ad driving the traffic, the customers in the acquisition team's test are not getting the experience the team thought they were! Customers will see a different price than what is advertised, and this has negative implications all around.

It is so important in a large analytics organization to be collaborating across teams and have an understanding of the tests in flight and how they could impact your test.

Population criteria: Obviously you want to target the correct people. But often I've seen criteria so specific that the results of the test need to be caveated with "These results are not representative of our customer base, this effect is for people who [[lists criteria here]]." If your test targeted super performers, you know the results don't apply to everyone in the base, but you want to make sure that is spelled out so it doesn't get miscommunicated to a broader audience.

Test duration: This is often directly related to sample size. (see Sarah's article) You'll want to estimate how long you'll need to run the test to achieve the required sample size. Maybe you're randomly sampling from the base and already have sufficient population to choose from. But often we're testing an experience for new customers, or we're testing a change on the website and we need to wait for traffic to visit the site and view the change. If it's going to take 6 months of running to get the required sample size, you probably want to rethink your population criteria or what you're testing. And better to know that upfront.

Success Metrics: This is an important one to talk through. If you've been running tests previously, I'm sure you've had stakeholders ask you for the kitchen sink in terms of analysis. If your hypothesis is that a message about a new feature on the website will drive people to go see that feature, it is reasonable to check how many people visited that page and whether or not people downloaded/used that feature. This change would probably be too benign to cause cancellations or affect upsell/cross-sell metrics, so make sure you're clear about what the analysis will and will not include. And try not to make a mountain out of a molehill unless you're testing something that is a dramatic change and has large implications for the business.

Decisions! Getting agreement ahead of time on what decisions will be made based on the results of the test is imperative. Have you ever been in a situation where the business tests something, it's not significant, and then they roll it out anyway? Well, then that really didn't need to be a test; they could have just rolled it out. There are endless opportunities for tests that will guide the direction of the business, so don't get caught up in a test that isn't actually a test.

Conclusion: Of course, each of these areas could have been explained in much more depth. But the main point is that there are a number of items you want to discuss before a test launches. Especially if you're on the hook for doing the analysis, you want to have the complete picture and context so that you can analyze the test appropriately.

I hope this helps you to be more collaborative with your business partners and potentially be more "proactive" rather than "reactive".

No one has any fun when you run a test and then later find out it should have been scoped differently. Adding a little extra work and clarification upfront can save you some heartache later on. Consider creating a document like the one I have pictured above for scoping your future tests, and you'll have a full understanding of the goals and implications of your next test ahead of launch. :)

Effective Data Science Presentations

If you're new to the field of Data Science, I wanted to offer some tips on how to transition from the presentations you gave in academia to creating effective presentations for industry.

Unfortunately, if your background is of the math, stats, or computer science variety, no one probably prepared you for creating awesome data science presentations in industry. And the truth is, it takes practice. In academia, we share tables of t-stats and p-values and talk heavily about mathematical formulas. That is basically the opposite of what you'd want to do when presenting to a non-technical audience. If your audience is full of STEM PhDs, then have at it, but in many instances we need to adjust the way we think about presenting our technical material.

I could go on and on forever about this topic, but here we'll cover:

Talking about model output without talking about the model

Painting the picture using actual customers or inputs

Putting in the Time to Tell the Story

Talking about model output without talking about the model: Certain models really lend themselves well to this. Logistic regression and decision trees are just screaming to be brought to life.

You don't want to be copying and pasting model output into your data science presentations. You also don't want to be formatting the output into a nice table and pasting it into your presentation. You want to tell the story, and log odds certainly are not going to tell the story for your stakeholders. A good first step for a logistic regression model is to exponentiate the log odds so that you're at least dealing in terms of odds. Since this output is multiplicative, you can say: "For each unit increase in [variable], we expect to see a lift of x% in the odds of [outcome], with everything else held constant." So instead of talking about the technical aspects of the model, we're just talking about how the different drivers affect the output.
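Here is a minimal Python sketch of that exponentiation step. The coefficient names and values are made up for illustration; in practice they would come from whatever logistic regression you fitted. Note that exp(coefficient) multiplies the *odds*, so the percentage is a change in the odds of the outcome rather than in its probability.

```python
import math


def odds_ratio_summary(coefficients):
    """Map each log-odds coefficient to (odds ratio, % change in the odds per unit increase)."""
    summary = {}
    for name, beta in coefficients.items():
        odds_ratio = math.exp(beta)          # exponentiate the log odds
        pct_change = (odds_ratio - 1) * 100  # multiplicative effect expressed as a % lift
        summary[name] = (odds_ratio, pct_change)
    return summary


# Hypothetical coefficients from a fitted churn model (illustrative values only)
coefficients = {"logins_last_30_days": 0.182, "support_tickets": -0.405}

for name, (odds_ratio, pct) in odds_ratio_summary(coefficients).items():
    direction = "increase" if pct >= 0 else "decrease"
    print(f"Each additional unit of {name} multiplies the odds by {odds_ratio:.2f}, "
          f"a {abs(pct):.0f}% {direction} in the odds, with everything else held constant.")
```

The printed sentences are the kind of plain-language statement you can drop into a slide instead of a table of coefficients.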

We could, however, take this one step further.

Using Actual Customers to Paint the Picture: I love using real-life use cases to demonstrate how the model is working. Above we see something similar to what I presented when talking about my seasonality model. Of course I changed his name for this post, but in the presentation I would talk about this person's business, why it's seasonal, show the obvious seasonal pattern, and let them know that the model classified this person as seasonal. I'm not talking about Fourier transforms; I'm describing how real people are being categorized and how we might want to think about marketing to them. Digging in deep like this also helps me to better understand the big picture of what is going on. We all know that when we dig deeper we see some crazy behavioral patterns.

Pulling specific customers/use cases works for other types of models as well. You built a retention model? Choose a couple of people with a high probability of churning and a couple with a low probability of churning, and talk about those people: "Mary here has been a customer for a long time, but she has been less engaged recently and hasn't done x, y, or z (model drivers), so the probability of her cancelling her subscription is high, even though customers with longer tenure are usually less likely to leave."

Putting in the Time to Tell the Story: As stated before, it takes some extra work to put these things together. Another great example is cluster analysis. You could create a slide for each attribute, but then people would need to comb through multiple slides to figure out WHO cluster 1 really is vs. cluster 2, etc. You want to aggregate all of this information for your audience. And I'm not above coming up with cheesy names for my segments; it just comes with the territory :).

It's worth noting here that if I didn't aggregate all this information by cluster, I also wouldn't be able to speak at a high level about who was actually getting into these different clusters. That would be a big miss on my part, because at the end of the day, your stakeholders want to understand the big picture of these clusters. For every analysis I present, I spend time thinking about the appropriate flow for the story the data can tell.

I might need additional information like market penetration by geography (or anything, really; the possibilities are endless). The number of small businesses by geography may not have been something I had in my model, but with a little Google search I can find it. I put in the little extra work to calculate market penetration, then create a map and use this information to further support my story. Or maybe I learn that market penetration doesn't support my story and I need to do more analysis to get to the real heart of what is going on. We're detectives. And we're not just dealing with the data that is actually in the model. We're trying to explore anything that might give interesting insight and help to tell the story. Also, if you do the extra work and find your story is invalidated, you just saved yourself some heartache. It's way worse when you present first and then later realize your conclusions were off. womp womp.

Closing comments: Before you started building your model, you made sure that the output would be actionable, right? At the end of your presentation, you certainly want to speak to next steps on how your model can be used and add value, whether that's coming up with new ways to communicate with customers that you think they'll respond to, improving retention, increasing acquisition, etc. But spell it out. Spend the time to come up with specific examples of how someone could use this output.

I'd also like to mention that learning best practices for creating great visualizations will help you immensely.

There are two articles by Kate Strachnyi that cover pieces of this topic. You can find those articles here and here. If you create a slide and have trouble finding the "so what?" of the slide, it probably belongs in the appendix. When you're creating the first couple of decks of your career, it might crush you to leave out a slide that you spent a lot of time on, but if it doesn't add something interesting, unfortunately that slide belongs in the appendix.

I hope you found at least one tip in this article that you'll be able to apply to your next data science presentation. If I can help just one person create a kick-ass presentation, it'll be worth it.

Up-Level Your Data Science Resume - Getting Past ATS

This series is going to scratch the surface of how to create an effective resume that gets calls. When I surveyed my email list, the top three things that people were concerned about regarding their resumes were:

- Being able to get past ATS (Applicant Tracking System)

- Writing strong impactful bullet points instead of listing “job duties”

- How to position yourself when you haven’t had a Data Science job previously

This article is the first part of a three-part series that will cover the above mentioned topics. Today we’re going to cover getting past ATS.

If you’re not familiar with ATS, it stands for Applicant Tracking System. If you’re applying directly on a website for a position, and the company is medium to large, it’s very likely that your resume will be subject to ATS before:

1. Your resume lands in the inbox of HR

2. You receive an automated email that looks like this:

It’s hard to speak for all ATS systems, because there are many of them. Just check out the number of ATS systems that indeed.com integrates with https://www.indeed.com/hire/ats-integration.

So how do you make sure you have a good chance of getting past ATS?

1. Make it highly likely that your resume is readable by ATS

2. Make it keyword rich, since ATS is looking for keywords specific to the job

Being readable by ATS:

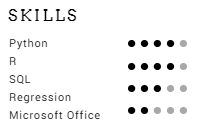

There has been a movement lately to create these gorgeously designed resumes. You’ll see people “Tableau-ize” their resume (i.e., create a resume using Tableau), include logos, or include charts that are subjective graphs of their level of knowledge in certain skill sets. An example of one of these charts looks like this:

ATS is not going to know what to do with those dots, just as it wouldn’t know what to do with a logo, your picture, or a table; do not use them. To test whether your resume is going to be parsed well by ATS, try copying the document and pasting it into Word. Is it readable? Or is there a bunch of other stuff? You can also try saving it as plain text and see what it looks like.

As data-loving storytellers, we naturally want to show that we can use visualizations to create an aesthetically appealing resume, and I understand that desire. And if you’re applying through your network, and not on a company website, maybe you’d consider these styles. I’m not going to assume I know your network and what they’re looking for. And of course, you can have multiple copies of your resume that you choose to use for specific situations.

What is parsable:

I’ve seen a number of blog posts in the data world saying things to the tune of “no one wants to see one of those boring old resumes.” However, those boring resumes are likely to score higher in ATS, because the information is parsable. And you can create an aesthetically pleasing, classic resume.

Some older ATS systems will only parse .doc or .docx formats, others will be able to parse .pdf, but not all elements of the .pdf will be readable if you try to use the fancy image types mentioned above.

Making your resume rich with keywords:

This comes in 2 forms:

1. Making sure that the skills mentioned in these job descriptions are specifically called out on your resume using the wording from the JD.

2. Reducing the amount of “fluff” content on your resume. If your bullets are concise, the ratio of keywords to fluff will be higher and will help you score better.

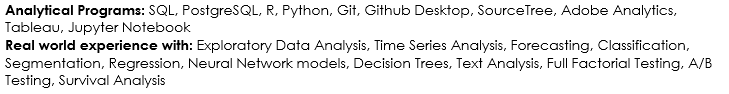

For point 1, I specifically mention my skills at the top of my resume:

I also make a point to specifically mention these programs and skills where applicable in the bullet points in my resume. If a job description calls for logistic regression, I would add logistic regression specifically to my resume. If the JD calls for just “regression,” I’ll leave this listed as regression on my resume. You get the idea.

It's also important to note that more than just technical skills matter when reading a job description. Companies are looking for employees who can also:

- communicate with the business

- work cross-functionally

- explain results at the appropriate level for the audience that is receiving the information.

If you’re applying for a management position, you’re going to be scored on keywords that are relevant to qualities expected of a manager. The job description is the right place to start to see what types of qualities they’re looking for. I’ll highlight specific examples in the resume course I’m launching soon.

For point 2, you want to make your bullet points as concise as possible: typically, start with a verb, then mention the action and the result. This will help you get that ratio of “keywords:everything” as high as possible.
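Real ATS scoring is proprietary and varies by vendor, so this is only a rough self-check and not how any particular ATS works. Here is a hypothetical Python helper that reports which job-description keywords appear in your resume text and what share of your words they make up (the “keywords:everything” ratio). You supply the keyword list by pulling it from the JD yourself.

```python
import re


def tokenize(text):
    """Lowercase words, keeping characters like + and # (so 'C++' and 'C#' survive)."""
    return re.findall(r"[a-z0-9+#]+", text.lower())


def keyword_report(resume_text, keywords):
    """Return (found, missing, density) for a list of JD keywords or phrases.

    density = words belonging to matched keywords / total words in the resume.
    Overlapping keywords (e.g. 'regression' and 'logistic regression') are each
    counted, so treat density as a rough indicator only.
    """
    tokens = tokenize(resume_text)
    joined = " ".join(tokens)
    found, missing = [], []
    keyword_words = 0
    for keyword in keywords:
        kw_tokens = tokenize(keyword)
        if not kw_tokens:
            continue  # skip keywords with no usable characters
        phrase = " ".join(kw_tokens)
        # Whole-word/phrase matches only, so 'r' does not match inside 'regression'
        hits = re.findall(r"(?<!\S)" + re.escape(phrase) + r"(?!\S)", joined)
        if hits:
            found.append(keyword)
            keyword_words += len(hits) * len(kw_tokens)
        else:
            missing.append(keyword)
    density = keyword_words / len(tokens) if tokens else 0.0
    return found, missing, density


resume = "Built logistic regression models in Python and SQL to predict churn."
jd_keywords = ["logistic regression", "SQL", "Tableau"]
found, missing, density = keyword_report(resume, jd_keywords)
print("Found:", found)
print("Missing:", missing)
print(f"Keyword density: {density:.0%}")
```

The "Missing" list is the useful part: it tells you which skills from the JD you should call out explicitly, using the JD's own wording, if you genuinely have them.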

In my next article in this series I'm sharing tips on how to position yourself for a job change. That article is here.

Favorite MOOCs for Data Scientists

I recently asked on LinkedIn about everyone’s favorite MOOCs in data science. This post started a lot of great discussion around the courses (and course platforms) that people had experience with. Certain courses were mentioned multiple times and were obviously being recommended by the community.

Here was the post:

Biggest takeaway:

Anything by Kirill Eremenko or Andrew Ng was highly regarded and mentioned frequently.

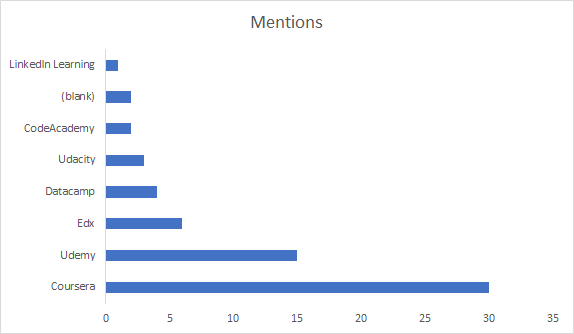

So I decided to revisit this post and aggregate the information that was being shared, so that people who are looking for great courses to build their data science toolkit can use this post as a starting point.

You’ll notice below that Coursera had the most mentions; this is mostly driven by Andrew Ng’s Machine Learning course (11 mentions for that course alone) and Python for Everybody (6 mentions, also on Coursera). Similarly, Kirill has a number of courses on Udemy that all had multiple mentions, giving Udemy a high number of mentions in the comments as well. (Links to the courses are further down in this article.) The 2 blanks were due to one specific course, “Statistical Learning in R,” a Stanford course. Unfortunately, I wasn’t able to find it online. Maybe someone can help out by posting where to find the course in the comments?

Update! Tridib Dutta and Sviatoslav Zimine reached out within minutes of this article going live to share the link for the Stanford course. There was also an edX course that was recommended that is not currently available, "Learning From Data (Introductory Machine Learning)," so I won’t be linking to that one.

If you’re familiar with MOOCs, you know that a number of platforms allow you to audit a course (i.e., watch the videos and read the materials for free), so definitely look into that option if you’re not worried about getting graded on your quizzes.

To make the list, a course had to be recommended by at least 2 people (with the exception of courses covering SQL and foundational math for machine learning: those didn’t get a lot of mentions, but the topics are pretty critical :).

I've organized links to the courses that were mentioned by topic. Descriptions of courses are included when they were conveniently located on the website.

Disclaimer: Some of these links are affiliate links, meaning that at no cost to you, I’ll receive a commission if you buy the course.

SQL:

“Sabermetrics 101: Introduction to Baseball Analytics” — edX: “An introduction to sabermetrics, baseball analytics, data science, the R Language, and SQL.”

“Data Foundations” — Udacity: “Start building your data skills today by learning to manipulate, analyze, and visualize data with Excel, SQL, and Tableau.”

Math:

“Mathematics for Machine Learning Specialization” — Coursera: “Mathematics for Machine Learning. Learn about the prerequisite mathematics for applications in data science and machine learning.”

Tableau:

“Tableau 10 A-Z: Hands-On Tableau Training for Data Science!” — Udemy (This is a Kirill Eremenko course)

R:

“R Programming” — Coursera: “The course covers practical issues in statistical computing which includes programming in R, reading data into R, accessing R packages, writing R functions, debugging, profiling R code, and organizing and commenting R code.”

"R Programming A-Z™: R For Data Science With Real Exercises!" — Udemy (This is a Kirill Eremenko course): "Learn Programming In R And R Studio. Data Analytics, Data Science, Statistical Analysis, Packages, Functions, GGPlot2"

If you're looking for the best R course that has ever existed, read about my favorite R programming course. I wouldn't call it a MOOC, because you have direct access to the instructor through Slack. But if you're serious about learning R, check this out. Link

Python:

“Python for Everybody Specialization” — Coursera: “will introduce fundamental programming concepts including data structures, networked application program interfaces, and databases, using the Python programming language.”

Python for Data Science:

“Applied Data Science With Python Specialization” — Coursera

“Python for Data Science” — Edx “Learn to use powerful, open-source, Python tools, including Pandas, Git and Matplotlib, to manipulate, analyze, and visualize complex datasets.”

Machine Learning:

“Machine Learning” — Coursera (This is an Andrew Ng course)

“Machine Learning A-Z™: Hands-On Python & R In Data Science” — Udemy (This is a Kirill Eremenko course)

“Python for Data Science and Machine Learning Bootcamp” — Udemy: “Learn how to use NumPy, Pandas, Seaborn, Matplotlib, Plotly, Scikit-Learn, Machine Learning, Tensorflow, and more!”

Deep Learning:

“Deep Learning Specialization” — Coursera (This is an Andrew Ng course): “In five courses, you will learn the foundations of Deep Learning, understand how to build neural networks, and learn how to lead successful machine learning projects. You will learn about Convolutional networks, RNNs, LSTM, Adam, Dropout, BatchNorm, Xavier/He initialization, and more.”

No one had anything bad to say about any particular course; however, some people did have preferences in terms of platforms. You can read the original post yourself here.

I hope these courses help you whittle down the plethora of options (it’s overwhelming!) and that you learn some great new information you can apply in your career. Happy learning!

Favorite MOOCs for Data Scientists. Data science courses. Data Science resources. Data analysis, data collection, data management, data tracking, data scientist, data science, big data, data design, data analytics, behavior data collection, behavior data, data recovery, data analyst. For more on data science, visit www.datamovesme.com

How Blogging Helps You Build a Community in Data Science

Holy Moly. I started blogging in March and it has opened my eyes.

I want to start off by saying that I didn't magically come up with this idea of blogging on my own. I noticed my friend Jonathan Nolis becoming active on LinkedIn, so I texted them to get the scoop. They told me to start a blog and jokingly said "I'm working on my #brand". I'm the type of person to try anything once, plus I already owned a domain name, had a website builder (from working at Vistaprint), and had an email marketing account (because I work for Constant Contact). So sure, why not? If you're thinking about starting a blog, know that you do not need to have a bunch of tools already at your disposal. If needed, you can publish articles on LinkedIn or Medium. There are many options to try before investing a penny . . . but of course, you can go ahead and create your own site.

I have since moved to self-hosted WordPress. I've fallen in love with blogging, and WordPress lets me take advantage of lots of extra functionality.

With my first post, my eyes started to open up to all the things that other members of the Data Science community were doing. And honestly, if you had asked me who I most looked up to in Data Science before starting my blog, I'd probably have just rattled off people who have created R packages that made my life easier, or people who post a lot of answers to questions on Stack Overflow. But now I was paying attention on LinkedIn and Twitter, and seeing the information that big data science influencers like Kirk Borne, Carla Gentry, Bernard Marr, and many others (seriously, so many others) were adding to the community.

I also started to see firsthand the number of people who were studying to become data scientists (yay!). Even people who are still in school or very early in their careers are participating by being active in the data science community. (You don't need to be a pro; just hop in.) If you're looking for great courses to take in data science, these ones have been highly recommended by the community here.

I've paid attention to my blog stats (of course, I'm a data nerd), and have found that the articles I write that get the biggest response are either:

Articles on how to get into data science

Coding demos on how to perform specific data science tasks

But you may find that something different works for you and your style of writing. I don't just post my articles on LinkedIn. I also post on Twitter, Medium, I send them to my email list, and I put them on Pinterest. I balked when someone first mentioned the idea of Pinterest for data science articles. It's crazy, but Pinterest is the largest referrer of traffic to my site. Google Analytics isn't lying to me.

I've chatted with so many people through LinkedIn messaging and have had the opportunity to speak with and (virtually) meet some awesome people who love data and are creating content around data science. I'm honestly building relationships and contributing to a community, and it feels great. If you're new to getting active in the data science community on LinkedIn, follow Tarry Singh, Randy Lao, Kate Strachnyi, Favio Vazquez, Beau Walker, Eric Weber, and Sarah Nooravi, just to name a few. You'll quickly find your tribe if you put yourself out there. I find that when I participate, I get back so much more than I've put in.

Hitting "post" for the very first time on content you've created is intimidating; I'm not saying that this will be the easiest thing you ever do. But you will build relationships and even friendships of real value with people who have the same passion. If you start a blog, I look forward to reading your articles and watching your journey.


Beginning the Data Science Pipeline - Meetings

I spoke in a webinar recently about how to get into Data Science. One of the questions asked was "What does a typical day look like?" I think there is a big opportunity to explain what really happens before any machine learning takes place for a large project. I've previously written about thinking creatively for feature engineering, but there is even more to getting ready for a data science project: you need to get buy-in on the project from other areas of the business to ensure you're delivering insights that the business wants and needs.

It may be that the business has a high-priority problem for you to solve, but often you'll identify projects with a high ROI and want to show others the value you could provide if you were given the opportunity to work on the project you've come up with.

The road to getting to the machine learning algorithm looks something like:

Plenty of meetings

Data gathering (often from multiple sources)

Exploratory data analysis

Feature engineering

Researching the best methodology (if it's not standard)

Machine learning

In this article, we're going to cover just the first bullet. There are a ton of meetings that take place before I ever write a line of SQL for a big project. If you read enough comments/blogs about Data Science, you'll see people say it's 90% data aggregation and 10% modeling (or some other similar split), but that's also not quite the whole picture. I'd love for you to fully understand what you're signing up for when you become a data scientist.

Meetings: As I mentioned, the first step is really getting buy-in on your project. It's important that as an Analytics department, we're working to solve the needs of the business. We want to help the rest of the business understand the value that a project could deliver by pitching the idea in meetings with these stakeholders. Just to be clear, I'm also not a one-woman show. My boss takes every chance he gets (in additional meetings) to talk about what we could potentially learn and act on with this project. After meetings at all different levels with all sorts of stakeholders, we might now have agreement that this project should move forward.

More Meetings: At this point I'm still not diving right into SQL. There may be members of my team who know of relevant data that I'm not aware of. Other areas of the business can also give input on which variables might be relevant (they don't know the database, but they have the business context, and this project is supposed to SUPPORT their work). There is potentially a ton of data living somewhere that has yet to be analyzed. The databases of a typical organization are quite large, and unless you've been at a company for years, there is most likely useful data that you are not aware of.

The first step was meeting with my team to discuss every piece of data that we could think of that might be relevant. Thinking of things like:

Whether something might be a proxy for customers who are more "tech savvy." Maybe this is having a business email address as opposed to a Gmail address (or any non-business email address), or maybe customers who utilize more advanced features of our product are the ones we'd consider tech savvy. It all depends on context and could be answered in multiple ways. It's an art.

Whether census data could tell us if a customer's zip code is in a rural or urban area. Urban and rural customers might have different needs and behave differently, or maybe the extra work to aggregate by rural/urban isn't necessary for this particular project. Bouncing ideas off others and including your teammates and stakeholders will directly impact your effectiveness.

What is available in the BigData environment? In the Data Warehouse? In other data sources within the company? When you really try to list everything, you find that this can be a large undertaking, and you'll want feedback from others.
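As one illustration of turning an idea like the "tech savvy" proxy into a feature, here is a hypothetical Python sketch that flags whether a customer uses a business email domain rather than a free webmail one. The list of free domains is an assumption and deliberately incomplete; in practice you'd build it from your own data.

```python
# Assumed, incomplete list of free webmail domains (extend from your own customer data)
FREE_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com", "outlook.com", "aol.com"}


def has_business_email(email):
    """True for a non-free domain, False for free webmail, None if the address can't be parsed."""
    if not email or "@" not in email:
        return None
    domain = email.strip().lower().rsplit("@", 1)[1]
    if not domain:
        return None
    return domain not in FREE_EMAIL_DOMAINS


customers = {"mary": "mary@acmeplumbing.com", "joe": "Joe.Smith@Gmail.com", "ann": "unknown"}
flags = {name: has_business_email(email) for name, email in customers.items()}
print(flags)
```

Whether a flag like this actually captures "tech savvy" customers is exactly the kind of question to bounce off your teammates and stakeholders.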

Once we have a list of potential data to find, the meetings start to help track all that data down. You certainly don't want to reinvent the wheel here. No one gets brownie points for writing all of the SQL themselves when it would have taken half the time to leverage queries teammates had already written. If I know of a project where someone has already created a few cool features, I email them and ask for their code; we're a team. For a previous project I worked on, there were 6 people outside of my team who knew the relevant tables or data sources better than anyone on my team. So it's time to ask those other people about those tables, and that means scheduling more meetings.

Summary: I honestly enjoy this process. It's an opportunity to learn about the data we have, work with others, and think of cool opportunities for feature engineering. The mental picture is often painted of data scientists sitting in a corner by themselves for months and then coming back with a model. But by getting buy-in and collaborating with other teams and your own team members, you can keep stakeholders informed throughout the process and feel confident that you'll deliver what they're hoping for. You can be a thought partner who proactively delivers solutions.

Tips for starting a data science project. Data analysis, data collection , data management, data tracking, data scientist, data science, big data, data design, data analytics, behavior data collection, behavior data, data recovery, data analyst. For more on data science, visit www.datamovesme.com.

How to Ace the In-Person Data Science Interview

I’ve written previously about my recent data science job hunt, but this article is solely devoted to the in-person interview: that full day where you try to razzle-dazzle ’em, cross your fingers, and hope you’re well prepared for whatever gets thrown at you. After attending a ton of these interviews, I’ve found that they tend to follow some pretty standard schedules.

But first, if you're sending out job applications and aren't hearing back, you'll want to take a second look at your resume. I've written a couple of articles on how to create a strong resume. One helpful article is

You may meet with 3–7 different people, and across those conversations you’ll probably cover:

Tell me about yourself

Behavioral interview questions

“White boarding” SQL

“White boarding” code (technical interview)

Talking about items on your resume

Simple analysis interview questions

Asking questions of your own

Tell me about yourself

I’ve mentioned this before when talking about phone screens. The way I approach this never changes. People just want to hear that you can speak to who you are and what you’re doing. Mine was some variation of:

“I am a Data Scientist with 8 years of experience using statistical methods and analysis to solve business problems across various industries. I’m skilled in SQL, model building in R, and I’m currently learning Python.”

Behavioral Questions

Almost every company I spoke with asked interview questions that should be answered in the STAR format. The most prevalent STAR questions I’ve seen in Data Science interviews are:

Tell me about a time you explained technical results to a non-technical person

Tell me about a time you improved a process

Tell me about a time you dealt with a difficult stakeholder, and how it was resolved

The goal here is to concisely and clearly explain the Situation, Task, Action and Result. My response to the “technical results” question would go something like this:

“Vistaprint is a company that sells marketing materials for small businesses online (always give context; the interviewer may not be familiar with the company). I had the opportunity to do a customer behavioral segmentation using k-means. This involved creating 54 variables, standardizing the data, plenty of analysis, etc. When it was time to share my results with stakeholders, I had really taken this information up a level and built out the story. Instead of talking about the methodology, I spoke to who the customer segments were and how their behaviors were different. I also stressed that this segmentation was actionable! We could identify these customers in our database and develop campaigns to target them, and I gave examples of specific campaigns we might try. This is an example of when I explained technical results to non-technical stakeholders.” (Always restate the question afterwards.)

For me, these questions required some preparation time. I gave real thought to the best examples from my experience and practiced saying the answers. This time paid off: I was asked these same questions over and over throughout my interviews.

White Boarding:


White Boarding SQL

This is when the interviewer has you stand at the whiteboard and answer some SQL questions. In most scenarios, they’ll tape a couple of pieces of paper up on the whiteboard. I have a free video course on refreshing SQL for the data science interview.

White Boarding Code

As I mentioned in my previous article, I was asked FizzBuzz two days in a row by two different companies. A possible way to write the solution (I just took a screenshot of my computer) is below:

fizzbuzz Interview coding

The coding problem will most likely involve some loops and logic statements, and it may have you define a function. The hiring manager just wants to be sure that when you say you can code, you at least have some basic programming knowledge.
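For reference, here is one possible FizzBuzz solution written as a Python function. It's a sketch of the standard version of the problem (count from 1 to n, replacing multiples of 3 with "Fizz", multiples of 5 with "Buzz", and multiples of both with "FizzBuzz"), not necessarily the exact code either company expected.

```python
def fizzbuzz(n: int) -> list[str]:
    """Return the FizzBuzz sequence for the numbers 1 through n."""
    results = []
    for i in range(1, n + 1):
        if i % 15 == 0:  # divisible by both 3 and 5
            results.append("FizzBuzz")
        elif i % 3 == 0:
            results.append("Fizz")
        elif i % 5 == 0:
            results.append("Buzz")
        else:
            results.append(str(i))
    return results


for line in fizzbuzz(15):
    print(line)
```

The order of the checks matters: if the `% 3` branch came first, 15 would come out as "Fizz" instead of "FizzBuzz". That's the kind of detail an interviewer may ask you to talk through.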

Items on Your Resume

I’ve been asked about all the methods I mention on my resume at one point or another (regression, classification, time-series analysis, MVT testing, etc.). I don’t mention my Master’s thesis on my resume, but I casually referenced it when asked whether I had previous experience with Bayesian methods.

The interviewer followed up with a question on the prior distributions used in my thesis.

I had finished my thesis 9 years earlier, couldn’t remember the priors, and told him I’d need to follow up.

I did follow up and send him the answer to his question, and they did offer me a job, but it’s not a scenario you want to find yourself in. If you are going to reference something, be able to speak to it, even if it means refreshing your memory on Wikipedia ahead of the interview. Things on your resume and projects you mention should be a home run.

Simple Analysis Questions

Some basic questions will be asked to make sure that you understand how numbers work. A question may require you to draw a graph or use some algebra to get at an answer, and your answer will show that you have some business context and can explain what is going on. Expect questions about changes in conversion or average sale price, such as “Why is revenue down in this scenario?” or “What model would you choose in this scenario?” Typically I’m asked two or three questions of this type.
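One common way to reason through a “why is revenue down?” question is to break revenue into its drivers, for example visitors × conversion rate × average sale price, and see which factor moved. The sketch below assumes that simple three-factor decomposition, and the `Period` class and all of its numbers are hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Period:
    visitors: int
    conversion_rate: float  # fraction of visitors who place an order
    avg_sale_price: float   # average revenue per order

    @property
    def revenue(self) -> float:
        return self.visitors * self.conversion_rate * self.avg_sale_price


# Hypothetical numbers for two periods.
last_month = Period(visitors=100_000, conversion_rate=0.030, avg_sale_price=50.0)
this_month = Period(visitors=100_000, conversion_rate=0.025, avg_sale_price=52.0)

print(f"Revenue: {last_month.revenue:,.0f} -> {this_month.revenue:,.0f}")

# Percent change in each driver shows which one explains the drop.
for name in ("visitors", "conversion_rate", "avg_sale_price"):
    before, after = getattr(last_month, name), getattr(this_month, name)
    print(f"{name}: {(after - before) / before:+.1%}")
```

In this made-up case traffic is flat and the average sale price actually rose, so the drop comes from conversion. Walking the interviewer through that logic matters more than the arithmetic itself.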

I was asked a probability question at one interview: what is the expected value of rolling a fair die? I was then asked what the expected value would be if the die were weighted in a certain way. I wasn’t allowed to use a calculator.
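The expected value is the probability-weighted average of the outcomes, E[X] = Σ x·P(x), so for a fair die it's (1+2+3+4+5+6)/6 = 3.5. The exact weighting from that interview isn't given here, so the weighted die below (a 6 that comes up half the time, with each other face at 1/10) is a hypothetical example. Python's `fractions.Fraction` keeps the arithmetic exact, much like working it out by hand without a calculator.

```python
from fractions import Fraction


def expected_value(probabilities: dict[int, Fraction]) -> Fraction:
    """Expected value: the sum of each outcome times its probability."""
    if sum(probabilities.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    return sum(face * p for face, p in probabilities.items())


fair_die = {face: Fraction(1, 6) for face in range(1, 7)}

# Hypothetical weighted die: a 6 comes up half the time,
# and each of the other five faces comes up 1/10 of the time.
weighted_die = {face: Fraction(1, 10) for face in range(1, 6)}
weighted_die[6] = Fraction(1, 2)

print(expected_value(fair_die))      # 7/2, i.e. 3.5
print(expected_value(weighted_die))  # 9/2, i.e. 4.5
```

By hand, the weighted case is (1+2+3+4+5)/10 + 6/2 = 1.5 + 3 = 4.5.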


Questions I asked:

Tell me about the behaviors of a person that you would consider a high-performing/high-potential employee.

Honestly, I used the question above to try to get at whether you needed to work 60 hours a week and on weekends to be someone who stood out. I work on weekends pretty frequently because I enjoy what I do, but I wouldn’t enjoy it if it were expected.

What software are you using?

Really, I like to get this question out of the way during the phone screen. I’m not personally interested in working for a SAS shop, so I’d want to know that upfront. My favorite response to this question is “you can use whatever open source tools you’d like as long as it’s appropriate for the problem.”

Is there anything else I can tell you about my skills and qualifications to let you know that I am a good fit for this job?

This is your opportunity to let them tell you if there is anything that you haven’t covered yet, or that they might be concerned about. You don’t want to leave an interview with them feeling like they didn’t get EVERYTHING they needed to make a decision on whether or not to hire you.

When can I expect to hear from you?

I also ask about the reporting structure, and I certainly ask about what type of projects I’d be working on soon after starting (if that is not already clear).

Summary

I wish you so much success in your data science interviews. Hopefully you meet a lot of great people, and have a positive experience. After each interview, remember to send your thank you notes! If you do not receive an offer, or do not accept an offer from a given company, still go on LinkedIn and send them connection requests. You never know when timing might be better in the future and your paths might cross.

To read about my job hunt from the first application until I accepted an offer,

.


The Successful Data Science Job Hunt

The point of this article is to show you what a successful Data Science job hunt looks like, from beginning to end. Strap in, friends. I’m about to take you from day 1 of being laid off to the day that I accepted an offer. Seriously, it was an intense two months.

I have an MS in Statistics and have been working in Advanced Analytics since 2010. If you’re new to the field, your experience may be different, but hopefully you’ll be able to leverage a good amount of this content.

We’re going to cover how I leveraged LinkedIn, keeping track of all the applications, continuing to advance your skills while searching, what to do when you receive an offer, and how to negotiate.

Day 1 Being Laid-off


Vistaprint decided to reduce its headcount by $20 million in employee salaries, and I was part of that cut. I knew the market was hot at the moment, so I was optimistic from day 1. I received severance, and this was an opportunity to give some real thought to what I would like my next move to be.

I happened to get laid off 4 days after I had dyed my hair bright pink for the first time, which was a bummer. I actually went to one job interview with my pink hair, and they loved it. However, I did decide to bring my hair back to a natural color for the rest of my search.

Very First Thing I Did:

I am approached by recruiters pretty frequently on LinkedIn, and I always reply. If you’re just getting into the field, you may not have past messages from recruiters in your LinkedIn inbox, but I mention this so that you can start doing it throughout the rest of your career.

Now that I was looking, my first action was to go through that list, message everyone, and say:

“Hi (recruiter person), I’m currently looking for a new opportunity. If there are any roles you’re looking to fill that would be a good fit, I’d be open to a chat.”

A number of people replied saying they had a role, but after speaking with them, it didn’t seem like the perfect fit for me at the moment.

In addition to reaching out to the recruiters who had contacted me, I also did a Google search (and a LinkedIn hunt) to find recruiters in the analytics space. I reached out to them as well to let them know I was looking. You never know who might know of something that isn’t on the job boards yet but is coming soon.

First Meeting With the Career Coach

As part of the layoff, Vistaprint set me up with a career coach. The information she taught me was incredibly valuable; I’ll be using her tips throughout my career. I met with Joan Blake from Transition Solutions. At our first meeting, I brought my resume and we talked about what I was looking for in my next role.

Because my resume and LinkedIn had been successful in the past, she did not change much of the content on my resume, but we did bring my skills and experience up to the top and put my education at the bottom.

They also formatted it to fit on one page. It’s starting to get longer, but I’m a believer in the one-page resume.

I also made sure to include a cover letter with my application. This gave me the opportunity to explicitly call out that my qualifications are a great match for their job description. It’s much clearer than making someone read through my resume for buzzwords.

I kept a spreadsheet with all of the companies I applied to. In this spreadsheet I’d put information like the company name, the date I completed the application, whether I had heard back, the last update, whether I had sent a thank-you note, the name of the hiring manager, etc. This helped me keep track of all the different things I had in flight, and whether there was anything I could be doing on my side to keep the process moving.
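If you’d rather keep that kind of application tracker in a plain file than in a spreadsheet app, here’s a minimal Python sketch using the standard `csv` module. The column names mirror the fields listed above; the file name `applications.csv`, the helper functions, and the sample row are hypothetical, for illustration only.

```python
import csv
from pathlib import Path

# Columns mirror the tracker fields described in the text.
FIELDS = [
    "company",
    "date_applied",
    "heard_back",
    "last_update",
    "thank_you_sent",
    "hiring_manager",
]


def add_application(path: Path, row: dict) -> None:
    """Append one application, writing the header row if the file is new."""
    is_new = not path.exists()
    with path.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow(row)


def awaiting_reply(path: Path) -> list[dict]:
    """Return applications with no reply yet (candidates for a follow-up)."""
    with path.open(newline="") as f:
        return [r for r in csv.DictReader(f) if r["heard_back"] == "no"]


tracker = Path("applications.csv")
add_application(tracker, {
    "company": "Example Co",  # hypothetical entry
    "date_applied": "2018-05-01",
    "heard_back": "no",
    "last_update": "applied online",
    "thank_you_sent": "no",
    "hiring_manager": "",
})
print(awaiting_reply(tracker))
```

Filtering on the "heard back" column is one way to answer the question the spreadsheet was for: is there anything I can do on my side to keep a process moving?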

Each Application:

For each job I applied to, I would then start a little hunt on LinkedIn. I’d look to see if anyone in my network currently worked for the company. If so, they’d probably like to know that I’m applying, because a lot of companies offer referral bonuses. I’d message the person and say something like:

“Hey Michelle, I’m applying for the Data Scientist position at ______________. Any chance you’d be willing to refer me?”

If there is no one in my network who works for the company, I then try to find the hiring manager for the position. Odds are it’s going to be a title like “Director (or VP) of Data Science and Analytics” or some variation; you’re trying to find someone who is a decision maker. This requires LinkedIn Premium, because I’m about to send an InMail. My message to a hiring manager/decision maker would look something like: