Object Detection Using YOLOv5 Tutorial – Part 3

Very exciting, you've made it to the 3rd and final part of the bus detection with YOLOv5 tutorial. If you haven't been following along this whole time, you can read about the camera setup, data collection, and annotation process here. The 2nd part of the tutorial focused on getting the data out of Roboflow, creating a CometML data artifact, and training the model. That 2nd article is here.

In this article, we're going to take the trained model and actually start doing live detection. Once we detect the bus, we'll receive a text. Here are the steps we're going to go through to set that up:

Choose the best training run

Run live detection

Send a text using AWS

Before we get started: if you've tried Coursera or other MOOCs to learn Python and you're still looking for a course that'll take you much further (working in VS Code, setting up your environment, and learning through realistic projects), this is the course I used: Python Course.

Choosing the best training run:

Here we're going to be using Comet. For this project, there were a couple of considerations. When I started with too few images, there was more value in picking the right model; currently it looks like any of my models trained on the larger set of images would work just fine. Basically, I wanted to minimize false positives. I definitely did not want a text when a neighbor was driving by, because these texts are going to my phone every day, and that would be annoying. Similarly, it's not a big deal if my model misses a couple of frames of the bus, as long as it consistently catches the bus driving past my house (each pass spans a number of frames). In other words, we want our precision to be very close to 1, and we can tolerate lower recall on individual frames.
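To make that trade-off concrete, here is a minimal sketch of how precision and recall are computed from frame-level counts. The counts are made-up numbers for illustration only, not results from this project's training runs.

```python
def precision(true_pos: int, false_pos: int) -> float:
    """Fraction of 'bus' detections that really were the bus."""
    total = true_pos + false_pos
    return true_pos / total if total else 0.0


def recall(true_pos: int, false_neg: int) -> float:
    """Fraction of actual bus frames that the model detected."""
    total = true_pos + false_neg
    return true_pos / total if total else 0.0


# Hypothetical counts: 90 correct bus frames, 1 false alarm, 30 missed frames.
tp, fp, fn = 90, 1, 30
print(f"precision={precision(tp, fp):.3f}, recall={recall(tp, fn):.3f}")
```

With counts like these, precision stays close to 1 even though a quarter of the bus frames are missed, which is the balance described above: very few false alerts, and enough detected frames per pass to still catch the bus.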

Run live detection:

It's showtime, folks! For the detection, I forked the YOLOv5 detect script. A link to my copy is here. I need to be able to run my own Python code each time the model detects the school bus. The YOLOv5 detect.py script provides many convenient output formats, but for this project I decided to add a parameter to the script called "on_objects_detected". This parameter is a reference to a function, and I altered detect.py to call that function whenever it detects objects in the stream. When it calls the function, it also passes a list of detected objects and the annotated image. With this in place, I can define my own function that sends a text message alert and pass it to the YOLOv5 detect script, which connects the model to my AWS notification code. You can 'Ctrl + F' my name 'Kristen' to see the places where I added lines of code and comments.
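The callback pattern can be sketched without YOLOv5 at all. In the snippet below, only the parameter name `on_objects_detected` comes from the fork; `run_detection`, `alert_on_bus`, the `(class_name, confidence)` tuple shape, and the `"bus"` label are hypothetical stand-ins, and the real signature in the forked detect.py may differ.

```python
from typing import Any, Callable, Iterable, List, Optional, Tuple

# Hypothetical shape: each detection is (class_name, confidence).
Detection = Tuple[str, float]
Callback = Callable[[List[Detection], Any], None]


def run_detection(frames: Iterable[Tuple[List[Detection], Any]],
                  on_objects_detected: Optional[Callback] = None) -> None:
    """Stand-in for the detect loop: invoke the callback for each frame with detections."""
    for detections, annotated_image in frames:
        if detections and on_objects_detected is not None:
            on_objects_detected(detections, annotated_image)


alerts: List[str] = []


def alert_on_bus(detections: List[Detection], annotated_image: Any) -> None:
    """Example callback; a real one would upload the image and send a text."""
    names = [name for name, _confidence in detections]
    if "bus" in names:
        alerts.append(f"Bus detected ({len(detections)} object(s) in frame)")


# Fake stream: an empty frame, a frame with a bus, and a frame with a car.
fake_frames = [
    ([], None),
    ([("bus", 0.93)], "frame-2.jpg"),
    ([("car", 0.80)], "frame-3.jpg"),
]
run_detection(fake_frames, on_objects_detected=alert_on_bus)
print(alerts)
```

Passing the function as a parameter keeps the notification code out of the detection script, so the same loop could drive a different action just by swapping the callback.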

Sending a text alert:

This was actually my first time using AWS, so I had to set up a new account. This Medium article explains how to set up an AWS account (skip the Go SDK part; I know nothing about Go). I then used the boto3 library to send the SMS.

import os

os.environ['AWS_SHARED_CREDENTIALS_FILE'] = '.aws_credentials'

import boto3

def test_aws_access() -> bool:

"""

We only try to use aws on detection, so I call this on startup of detect_bus.py to make sure credentials

are working and everything. I got sick of having the AWS code fail hours after starting up detect_bus.py...

I googled how to check if boto3 is authenticated, and found this:

https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/sts.html#STS.Client.get_caller_identity

"""

try:

resp = boto3.client('sts').get_caller_identity()

print(f'AWS credentials working.')

return True

except Exception as e:

print(f'Failed to validate AWS authentication: {e}')

return False

def send_sms(msg):

boto3.client('sns').publish(

TopicArn='arn:aws:sns:us-east-1:916437080264:detect_bus',

Message=msg,

Subject='bus detector',

MessageStructure='string')

def save_file(file_path, content_type='image/jpeg'):

"""Save a file to our s3 bucket (file storage in AWS) because we wanted to include an image in the text"""

client = boto3.client('s3')

client.upload_file(file_path, 'bus-detector', file_path,

ExtraArgs={'ACL': 'public-read', 'ContentType': content_type})

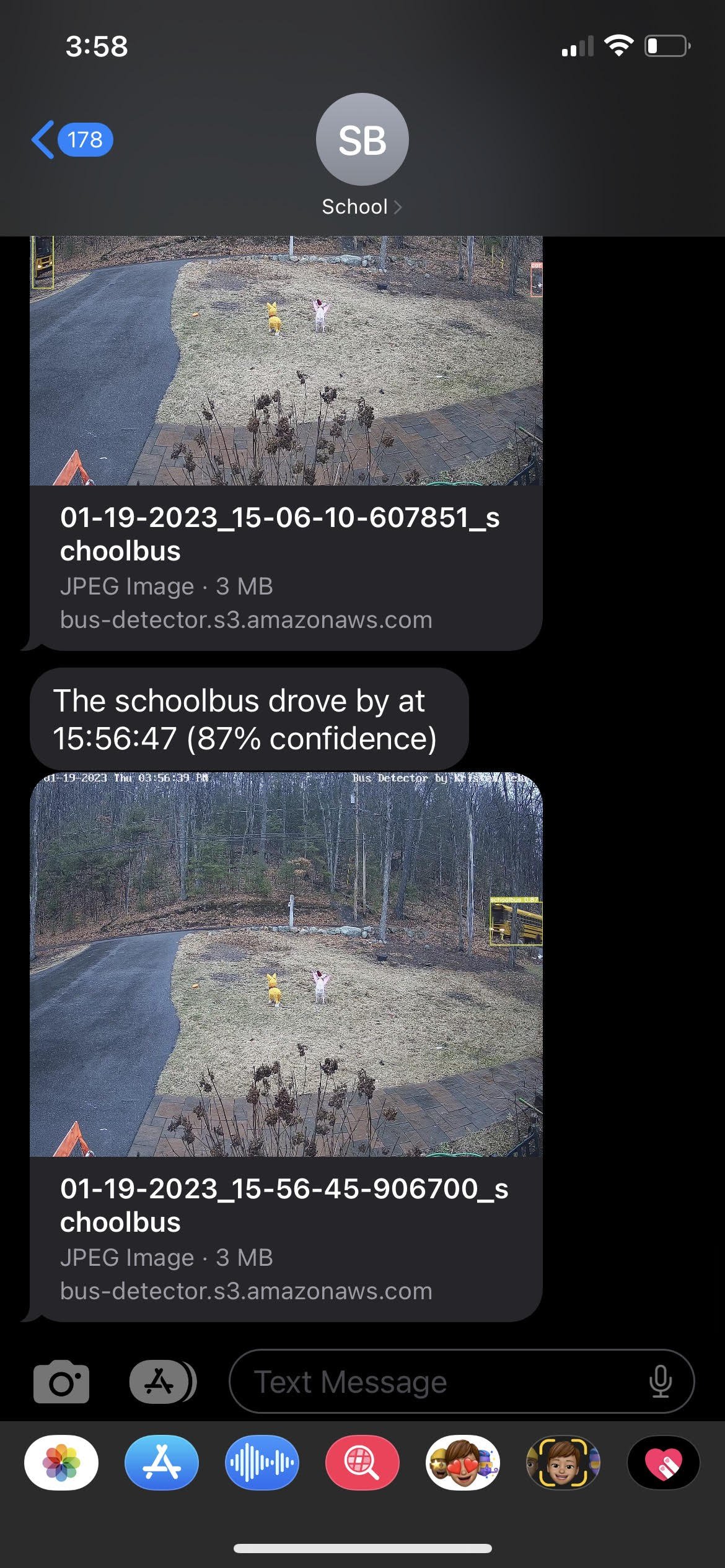

return f'https://bus-detector.s3.amazonaws.com/{file_path}'Since we're passing the photo here, you'll get to see the detected picture in the text you receive (below). I went out of my way to add this because I wanted to see what was detected. If it was not a picture of a bus for some reason, I'd like to know what it was actually detecting. Having this information could help inform what type of training data I should add if it wasn't working well.

I also added logic so that I'm only notified of the bus once every minute; I certainly don't need a text for each frame of the bus in front of my house. Luckily, it's been working very well. I haven't missed a bus. I have had a couple of false positives, but they haven't been in the morning and it's a rare issue.
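A once-per-minute limit like this can be implemented with a small cooldown check. The sketch below is one possible approach, not the code from the project; the `AlertThrottle` class and its method names are hypothetical.

```python
import time
from typing import Callable, Optional


class AlertThrottle:
    """Allow at most one alert per cooldown window."""

    def __init__(self, cooldown_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.cooldown_seconds = cooldown_seconds
        self._clock = clock  # injectable so the logic can be tested without waiting
        self._last_alert: Optional[float] = None

    def should_alert(self) -> bool:
        """Return True and start a new window if the cooldown has elapsed."""
        now = self._clock()
        if self._last_alert is None or now - self._last_alert >= self.cooldown_seconds:
            self._last_alert = now
            return True
        return False


throttle = AlertThrottle(cooldown_seconds=60)
if throttle.should_alert():
    print("First detection: this is where the text would be sent.")
if not throttle.should_alert():
    print("Second detection within a minute: no text.")
```

Using a monotonic clock rather than wall-clock time means the cooldown is unaffected if the system clock is adjusted while the detector is running.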

In order to be allowed to send text messages through AWS SNS in the US, I'm required to have a toll-free number which is registered and verified (AWS docs). Luckily, AWS can provide me with a toll-free number of my own for $2/month. I then used the AWS console to complete the simple TFN registration process where I described the bus detector application and how only my family would be receiving messages from the number (AWS wants to make sure you're not spamming phone numbers).

Getting a .csv of the data:

Although this wasn't part of the intended use case, I'd like to put the bus data over time into a .csv so that I can make a dashboard (really I'm thinking about future projects here; it's not necessary for this project). I've started looking at plots of my data to understand the average time that the bus comes for each of its passes by my house. I'm starting to see how I could potentially use computer vision and text alerts for other use cases where this data might be more relevant.

import os

import boto3
import pytz

os.environ['AWS_SHARED_CREDENTIALS_FILE'] = '.aws_credentials'

# List the detection images in the bucket (a single call returns at most 1,000 objects)
resp = boto3.client('s3').list_objects_v2(
    Bucket='bus-detector',
    Prefix='images/'
)


def get_row_from_s3_img(img):
    # S3 timestamps are in UTC; convert them to local time
    local = img['LastModified'].astimezone(pytz.timezone('America/New_York'))
    return {
        'timestamp': local.isoformat(),
        'img_url': f'https://bus-detector.s3.amazonaws.com/{img["Key"]}',
        # the class is the last underscore-separated part of the file name, before the extension
        'class': img['Key'].split('_')[-1].split('.')[0]
    }


images = resp['Contents']
images.sort(reverse=True, key=lambda e: e['LastModified'])  # newest first
rows = list(map(get_row_from_s3_img, images))

lines = ['timestamp,image_url,class']
for row in rows:
    lines.append(f'{row["timestamp"]},{row["img_url"]},{row["class"]}')

# the with block closes the file even if the write fails
with open('data.csv', 'w') as file:
    file.write('\n'.join(lines) + '\n')

Summary:

Well, that's it, folks. You've been with me on a journey through my computer vision project to detect the school bus. I hope something here can be applied in your own project. In this article we actually ran the detection and set up text alerts, super cool. Going through this exercise, I can see a number of other ways I could make my life easier using similar technology. Again, the first article about camera setup, data collection, and annotation in Roboflow is here. The 2nd part of the tutorial, which focused on downloading the data, creating a CometML data artifact, and training the model, is here.
