Why Data Scientists Can Make Great Algorithmic Traders - Interview with Jason Strimpel

**Disclaimer: Quant Science is not a registered investment adviser under the Investment Advisers Act or a commodity trading advisor under the Commodity Exchange Act. The information provided is for educational and informational purposes only and does not constitute investment, financial, or trading advice.**

**Disclosure: This post contains affiliate links. As an affiliate of Quant Science, I may receive a commission if you sign up or make a purchase using my links, at no additional cost to you. All opinions expressed are my own and based on my personal experience.**

What if the skills you’ve been honing in data science gave you a head start in taking up algorithmic trading? I was so lucky to have the opportunity to speak with Jason Strimpel, Founder of PyQuant News and Co-founder of Quant Science. He has been in trading and technology for 25 years. He was a hedge fund trader, risk quant, machine learning engineering manager, and GenAI specialist at AWS. He is now the Managing Director of AI and Advanced Analytics at a major consulting company.

Since he’s well-versed in both areas, Jason was the perfect person to ask about the intersection of data science and algorithmic trading. This article is a recap of the conversation. I asked questions, he shared insights, and I’ll sprinkle in a few of my own reflections along the way. All my comments will have my name in front of them. Otherwise, the words are either direct quotes from Jason or a paraphrase.

Jason gave his thoughts on:

Which data science skills actually transfer into trading (hint: it’s not just the technical stuff)

Why building a trading strategy feels a lot like building a machine learning model

The messy reality of financial market data (it’s definitely not your textbook dataset)

Pitfalls like overfitting and survivorship bias that trip up even smart quants

The mindset shift data scientists need to survive the markets

Jason’s go-to tools if you want to dip your toes into trading today

Transferable Skills: From Messy Data to Messy Markets. “What are the immediately transferable skills for a data scientist considering algorithmic trading?”

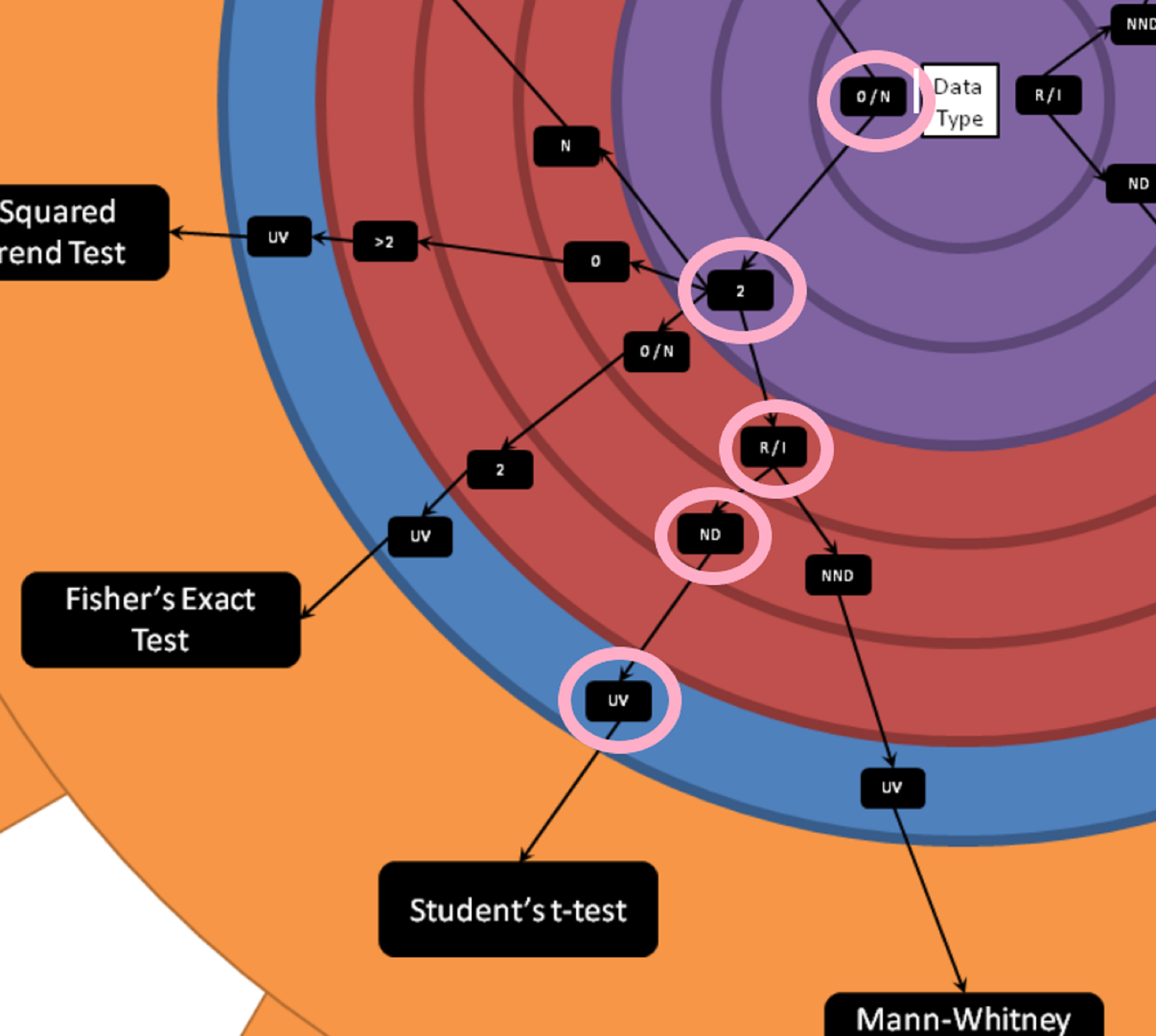

Jason quickly mentioned that, of course, there’s the technical side. You already know how to work with tools and data, and for the stock market, that’s a good start. There are libraries for almost everything now, so you’ll be able to find your way to results. But then there’s “how do you interpret those results in the context or domain?” The discipline and rigor that we’ve honed as data scientists is very transferable here. He noted that data science leans more towards statistical analysis, while quantitative finance is typically associated with stochastic calculus, so the underlying concepts are a little different, but he believes it’s something that data scientists can pick up. Having the analytics framework mentally is the important part. Then the more philosophical part is “how do you make that theory come to life?” It’s similar to probability: because you’re thinking in terms of bets, you need to think in terms of a distribution of outcomes.

Trading Strategies: Similar Science, Different Playground. “How is building a trading strategy like building machine learning models?”

Jason shared that although we’re not always strictly following the scientific process in either domain, it’s similar; you form a hypothesis, you collect data, you test your hypothesis, and then you draw conclusions. In trading, the P&L starts to feel like just another diagnostic.

“If I’m losing money, not great, but ok, it’s just another data point telling me something.”

Kristen - My take (so far) is that I was pumped about the amount of overlap. My current strategy (leveraging code from the Quant Science course) uses XGBoost, so although there is much to learn, a lot of what I already know carries over. It’s the full pipeline, but with huge opportunities to learn, iterate, build, and track. It’s a project that I can really sink my teeth into.

Overfitting: The Trap You’ll Fall Into (and How to Spot It). “What does data cleaning and feature engineering look like for algorithmic trading?”

Of course, Jason made the quip about how in academic programs, you receive beautifully clean data sets. In both data science and stock market work, that is never the case, but stock data has the added complexity of being non-stationary. This breaks a lot of the assumptions required for traditional time series modeling. He discussed trade-offs, noting that people will try to normalize prices so that you’re looking at returns, but then you lose all the memory. (Using returns, you’re working with less granular information.) Another issue is data-cleaning problems, like when Facebook changed its name to Meta (changing the ticker symbol in the data). And then there’s the problem of “survivorship bias”.

Survivorship bias = drawing conclusions from the visible winners, while overlooking the invisible losers.

For survivorship bias, the example Jason gave was Enron. If you had “looked at the market 20 years ago, you would have included Enron and been wrong”. For those unfamiliar with Enron, it was once one of the largest U.S. companies, celebrated for its innovation, before collapsing in 2001 due to massive accounting fraud. A dataset built only from the companies that still exist today silently leaves out names like Enron, so a backtest run on it never has to live through that collapse. More generally, it’s possible to conduct a backtest that shows 25% returns, but when you trade it live, your actual performance is only half that.
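To make the survivorship effect concrete, here is a minimal sketch with made-up numbers. The tickers, returns, and `delisted` flags are all hypothetical, not real market data. It compares the average return of a universe that only contains companies still listed today against the full universe that includes the ones that collapsed.

```python
from statistics import mean

# Hypothetical 10-year total returns; "delisted" marks companies that no longer trade.
universe = [
    {"ticker": "AAA", "total_return": 1.50, "delisted": False},
    {"ticker": "BBB", "total_return": 0.40, "delisted": False},
    {"ticker": "CCC", "total_return": -1.00, "delisted": True},   # went bankrupt
    {"ticker": "DDD", "total_return": 0.10, "delisted": False},
    {"ticker": "EEE", "total_return": -0.90, "delisted": True},   # delisted after a collapse
]


def average_return(stocks):
    """Equal-weighted average of total returns."""
    return mean(s["total_return"] for s in stocks)


survivors_only = [s for s in universe if not s["delisted"]]

biased = average_return(survivors_only)   # what a "today's tickers only" dataset shows
unbiased = average_return(universe)       # what you could actually have bought back then

print(f"Survivors only: {biased:.1%}")
print(f"Full universe:  {unbiased:.1%}")
```

The gap between the two numbers is the bias: the losers were investable at the time, but they vanish from a dataset assembled after the fact.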

Kristen - I’m sure we’ve all put a model out in the wild that we thought would perform better than it actually did. It’s probably worse in trading, but I’d consider that something the two disciplines have in common.

Overfitting is another big problem, and it’s especially relevant here because there is so little signal given the nature of the data. Jason said you will “overfit regularly and be fooled into thinking you actually have something that is working.” And sometimes, you can even get lucky, and you think that you’re winning because you had a winner, until you stop being so lucky.
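One way to see how easy it is to be fooled is a small simulation. This is only a sketch under stated assumptions: the 200 "strategies" below are pure noise with zero true edge, and the seed, counts, and volatility are arbitrary choices. Picking the best one in-sample still produces something that looks like a winner.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the run is repeatable

N_STRATEGIES = 200   # hypothetical number of parameter combinations tried
N_DAYS = 250         # roughly one trading year per sample


def noise_returns(n_days):
    """Daily returns with zero true edge: pure noise."""
    return [rng.gauss(0.0, 0.01) for _ in range(n_days)]


# Each "strategy" gets an in-sample year and an out-of-sample year of noise.
strategies = [(noise_returns(N_DAYS), noise_returns(N_DAYS)) for _ in range(N_STRATEGIES)]

# Pick the winner the way a naive parameter sweep would: best in-sample average.
in_sample_means = [mean(in_s) for in_s, _ in strategies]
best_idx = max(range(N_STRATEGIES), key=lambda i: in_sample_means[i])

best_in_sample = in_sample_means[best_idx]
best_out_of_sample = mean(strategies[best_idx][1])

print(f"Best in-sample daily mean:    {best_in_sample:.5f}")
print(f"Same strategy, out-of-sample: {best_out_of_sample:.5f}")
```

The in-sample winner is positive simply because it was selected as the maximum of many noisy draws. Its out-of-sample number is just another draw from the same zero-edge distribution.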

When Your Model Looks Too Good to Be True: “How do you know you’re overfitting?”

“We do things like testing for parameter robustness. So if you change your input parameter by a little bit, and your output swings a lot (meaning your outputs are very sensitive to your inputs), that’s a red flag. I typically perform one-sided t-tests to determine if there’s statistical significance after running a series of simulations. We also use what’s called the information coefficient, and I also avoid the classes of strategies that are more prone to overfitting. In factor-based strategies, there is less opportunity to overfit.”
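The two checks Jason describes can be sketched in a few lines. This is an illustration, not his actual code: the helper names `sensitivity` and `one_sided_t_stat`, the Sharpe values per lookback window, and the simulated returns are all hypothetical.

```python
import math
from statistics import mean, stdev


def one_sided_t_stat(returns):
    """t-statistic for H0: mean return <= 0 against H1: mean return > 0."""
    n = len(returns)
    return mean(returns) / (stdev(returns) / math.sqrt(n))


def sensitivity(metric_by_param, chosen):
    """Largest relative change in the metric when moving to a neighbouring parameter."""
    params = sorted(metric_by_param)
    i = params.index(chosen)
    neighbours = [params[j] for j in (i - 1, i + 1) if 0 <= j < len(params)]
    base = metric_by_param[chosen]
    return max(abs(metric_by_param[p] - base) / abs(base) for p in neighbours)


# Hypothetical Sharpe ratios from backtests at different lookback windows.
robust = {100: 0.90, 110: 0.95, 120: 1.00, 130: 0.97, 140: 0.92}
fragile = {100: 0.10, 110: 0.20, 120: 1.00, 130: 0.15, 140: -0.30}

print(sensitivity(robust, 120))    # small: neighbours tell the same story
print(sensitivity(fragile, 120))   # large: the result hinges on one exact value

# Hypothetical mean returns from a series of simulations.
simulated = [0.012, 0.008, -0.003, 0.015, 0.006, 0.010, -0.001, 0.009]
t_stat = one_sided_t_stat(simulated)
print(f"t-statistic: {t_stat:.2f} with {len(simulated) - 1} degrees of freedom")
```

To turn the t-statistic into a decision, compare it with the one-sided critical value for your degrees of freedom (about 1.895 at the 5% level for 7 degrees of freedom). If you have SciPy installed, `scipy.stats.ttest_1samp(simulated, 0, alternative="greater")` gives an exact p-value.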

Kristen - In the data science world, I’ve definitely had to do checks for the robustness of results and parameters, but the information coefficient was new to me, and I’ve heard Jason share before about how he uses this one to evaluate backtests. One of my next articles will be a deep dive into understanding and interpreting the information coefficient, so be on the lookout for that! (Maybe consider joining my email list).
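As a small preview, one common way to compute an information coefficient is the Spearman rank correlation between a factor’s scores and the returns that follow. That definition is an assumption here, since the interview doesn’t spell out which variant Jason uses, and the scores and returns below are made up.

```python
def ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Plain Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def information_coefficient(factor_scores, forward_returns):
    """Spearman rank correlation between factor scores and later returns."""
    return pearson(ranks(factor_scores), ranks(forward_returns))


# Hypothetical cross-section: one factor score and one forward return per stock.
scores = [0.9, 0.1, 0.5, 0.7, 0.3]
fwd_returns = [0.04, -0.02, 0.03, 0.01, -0.01]
ic = information_coefficient(scores, fwd_returns)
print(round(ic, 2))  # prints 0.9
```

An IC near 1 means the factor ranked stocks almost exactly in the order they went on to perform, near 0 means the ranking carried no information, and negative values mean it pointed the wrong way.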

From Loss Functions to Market Chaos: The Mindset Shift. “What type of mindset shift do data scientists need to make when they first get into algorithmic trading?”

There’s beauty in math, statistics, and analyzing data. But financial markets are messy. You will spend time on all of this and then watch it blow up or fail very quickly.

People in general have a lot of ego when it comes to money. The quicker you can separate that, the better.

You have to iterate very, very quickly. With templates and infrastructure in place, you’ll find something that works faster.

You have to like the journey, because you will be repeating the same thing over and over.

There’s a mental model of being a little more scrappy, maybe your code isn’t beautiful, maybe you’re less pristine with your analysis.

In ML, you’re often looking at the loss function, maybe thinking about the trade-off between type I and type II error, but with these models, you’re considering so many more metrics. You’ll need to learn a whole new suite of metrics, Sharpe, Calmar, Sortino, etc.

Sharpe Ratio - Measures risk-adjusted return by comparing your returns (above what you’d earn in something essentially “risk-free,” like U.S. Treasury bills) to how volatile your portfolio is. A higher Sharpe ratio means you’re earning more return for each unit of risk you take. A Sharpe > 1 is usually considered “good,” and > 2 “very good.”

Calmar Ratio - Measures annualized return divided by maximum drawdown (the worst peak-to-trough decline), so it focuses on return relative to drawdowns instead of volatility. It’s good for strategies with asymmetric risk, because it directly penalizes strategies with deep drawdowns (even if volatility looks low). Traders often like this better than Sharpe if they care more about capital preservation.

Sortino Ratio - A refinement of Sharpe that only considers downside volatility (bad volatility), ignoring upside swings. A higher Sortino means better risk-adjusted returns where “risk” is defined as harmful volatility. It’s often more intuitive than Sharpe because investors generally don’t mind upside surprises.
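Here is a minimal sketch of how the three ratios can be computed from a list of periodic returns. The conventions are assumptions you should check against whatever library you use: 252 trading days per year, a zero risk-free rate by default, and a downside deviation measured against a target of 0. The sample returns are made up.

```python
import math
import statistics

TRADING_DAYS = 252  # assumed number of return periods per year


def sharpe_ratio(returns, risk_free_per_period=0.0, periods_per_year=TRADING_DAYS):
    """Annualized mean excess return divided by the volatility of excess returns."""
    excess = [r - risk_free_per_period for r in returns]
    return statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(periods_per_year)


def sortino_ratio(returns, target=0.0, periods_per_year=TRADING_DAYS):
    """Like Sharpe, but the denominator only counts returns below the target."""
    excess = [r - target for r in returns]
    downside = math.sqrt(sum(min(e, 0.0) ** 2 for e in excess) / len(excess))
    if downside == 0:
        return math.inf  # no losing periods in the sample
    return statistics.mean(excess) / downside * math.sqrt(periods_per_year)


def max_drawdown(returns):
    """Worst peak-to-trough decline of the compounded equity curve (a negative number)."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1 + r
        peak = max(peak, equity)
        worst = min(worst, equity / peak - 1)
    return worst


def calmar_ratio(returns, periods_per_year=TRADING_DAYS):
    """Annualized compound return divided by the size of the maximum drawdown."""
    years = len(returns) / periods_per_year
    cagr = math.prod(1 + r for r in returns) ** (1 / years) - 1
    drawdown = abs(max_drawdown(returns))
    return math.inf if drawdown == 0 else cagr / drawdown


# Hypothetical daily returns, for illustration only.
daily = [0.02, 0.01, -0.01, 0.03, 0.00, -0.02, 0.02]
print(f"Sharpe:  {sharpe_ratio(daily):.2f}")
print(f"Sortino: {sortino_ratio(daily):.2f}")
print(f"Calmar:  {calmar_ratio(daily):.2f}")
print(f"Max drawdown: {max_drawdown(daily):.2%}")
```

Seven data points are far too few for these numbers to mean anything. The point is only to show what each ratio puts in its numerator and denominator.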

This part of the interview was made into a YouTube clip, which you can check out here.

“The failure I most often see people making is not having a strategy that’s linked to the economic reality. And just thinking that they can brute force optimize some input parameters as generalizable into the future.”

“What tools would you suggest if someone wanted to get started today?”

Pandas or Polars (or dplyr if you’re an R person).

yfinance for data.

OpenBB is also for data, but has a broader range.

Get familiar with the underlying data, “prove to yourself that the distributions are not stationary. Prove to yourselves that normalizing destroys memory.”

Then start working with a backtesting framework like VectorBT. This is a great backtesting engine that will get you started very quickly. Then review some of the performance metrics I’ve mentioned.

Jason also shares all sorts of actionable tips in PyQuant News, and he has a course, “Getting Started With Python for Quant Finance”.
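Jason’s “prove it to yourself” exercise can be started on simulated data before touching a real download. The sketch below is an assumption-laden stand-in: it builds a geometric random walk rather than pulling real prices, so it shows the mechanics of the comparison instead of proving anything about actual markets. Swap in a real price series from yfinance or OpenBB to run the same checks.

```python
import math
import random
from statistics import mean

rng = random.Random(7)  # fixed seed so the run is repeatable

# Simulated stand-in for a daily price series: a geometric random walk.
log_returns = [rng.gauss(0.0003, 0.01) for _ in range(2000)]
prices = [100.0]
for r in log_returns:
    prices.append(prices[-1] * math.exp(r))


def lag1_autocorr(series):
    """Correlation between each value and the one before it."""
    m = mean(series)
    num = sum((a - m) * (b - m) for a, b in zip(series[:-1], series[1:]))
    den = sum((a - m) ** 2 for a in series)
    return num / den


half = len(prices) // 2
print("mean price, first half :", round(mean(prices[:half]), 2))
print("mean price, second half:", round(mean(prices[half:]), 2))
print("lag-1 autocorr, prices :", round(lag1_autocorr(prices), 3))
print("lag-1 autocorr, returns:", round(lag1_autocorr(log_returns), 3))
```

Prices carry their history with them, so the average level drifts between the two halves and the lag-1 autocorrelation sits close to 1. Converting to returns removes that drift, but it also removes almost all of the dependence on the past, which is the memory Jason is talking about.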

“Will AI make the quant role more accessible to data scientists, or do you think it’s going to make it competitive in a way that is prohibitive?”

I think it’ll separate the big winners, who are already winning, from everyone else. And data scientists just getting started are included in the “everyone else”. The big money managers and institutions will be able to leverage so much data at scale in a way that we can’t. At the same time, there are so many retail investors who are only leveraging price data, and it’s becoming easier for us to engineer interesting features from unstructured data in a way that would have been more difficult a couple of years ago.

Kristen - To paraphrase, my takeaway was that it’s now easier than ever for us to leverage market data, fundamental data, and other alternative data in our strategies. That already gives us an edge against most retail traders, who only look at price. At the same time, we shouldn’t kid ourselves; we won’t be competing head-to-head with the big institutions that use the same data at a massive scale.

Summary:

If there’s one big takeaway from my chat with Jason, it’s this: the jump from data science to quant trading isn’t as huge as it looks, but the markets aren’t going to play nice. The same habits that make you a good data scientist still apply: bring your rigor, test ideas like a scientist, keep an eye out for patterns that won’t hold up in the real world, and don’t get fooled by overfitting. Start simple, move fast, and remember that P&L is just feedback.

Honestly, I was so pumped to get the chance to pick Jason’s brain on this. He’s been at the intersection of data and markets for years, and it was a rare opportunity to learn directly from someone who’s lived both sides.

I’ll be doing a deeper dive soon on the information coefficient, so keep an eye out if that’s up your alley. And if you want to explore further, definitely check out Jason’s articles at PyQuant News and the Quant Science course we talked about.

**The content in this article is for informational and educational purposes only. It is not intended as financial, investment, or trading advice. All strategies and opinions expressed are those of the author and do not constitute recommendations to buy, sell, or hold any financial instruments. Trading and investing involve risk, and you should conduct your own research or consult a qualified financial advisor before making any investment decisions. ** Hypothetical or simulated performance results have inherent limitations and do not represent actual trading. No representation is being made that any account will or is likely to achieve profits or losses similar to those shown. Quant Science is not a registered investment adviser or commodity trading advisor, and nothing herein should be construed as personalized advice. **

The Moment My Trading Went From Demo to Professional (Quant Science Level 2 Review)

**Disclaimer: Quant Science is not a registered investment adviser under the Investment Advisers Act or a commodity trading advisor under the Commodity Exchange Act. The information provided is for educational and informational purposes only and does not constitute investment, financial, or trading advice.**

**Disclosure: This post contains affiliate links. As an affiliate of Quant Science, I may receive a commission if you sign up or make a purchase using my links, at no additional cost to you. All opinions expressed are my own and based on my personal experience.**

I just finished the Quant Science program, and now I’m testing strategies end-to-end. This is exactly where I wanted to get to, and I can’t imagine how long it would’ve taken me on my own. The Level 2 course is where things feel less like a guided demo, and you move to a professional automated trading workflow. We transitioned from notebooks to scripts, integrating new tools such as Quant Science’s qsconnect, qsresearch, and qsautomate, and also started using Prefect and MLflow in our process.

Here’s what I’ll cover in this article:

What changes in Level 2 compared to Level 1

Getting and managing a larger dataset with FMP

Screening the universe with qsconnect

Combining value and momentum factors in strategies with qsresearch

Using MLflow to track and compare backtests

Orchestrating and deploying strategies

My thoughts so far

What Changes in Level 2

In Level 1, we mostly worked with a smaller universe and Jupyter notebooks. Strategies like momentum, risk parity, and crack spread were introduced with all the heavy lifting already built in, and it was great to be able to start playing immediately after class. If you’re interested in reading more about Level 1, I’ve shared an article here.

In Level 2, we’re still given the code, but we’re building out something that feels much more professional:

We’re pulling raw data from Financial Modeling Prep (FMP) instead of working with yfinance. FMP is more robust, with cleaner, broader coverage and an official API. As part of the course, Jason and Matt at Quant Science have worked out a discount with FMP, and I’m currently paying $30/month.

We’re screening a larger universe (leveraging that FMP data), often thousands of tickers, and we also start incorporating fundamental data into our strategies. The bigger volume of data lets you test whether a strategy holds up across diverse market conditions and sectors, increases your sample size for backtesting, helps you create better factors, can reduce survivorship bias (provided the data includes companies that were later delisted), and gives you a wider pool of stock candidates for trading.

The workflow shifts from notebook experiments to modular Python scripts. Throughout the course, we’re moving from tinkering to automation.

Backtesting becomes more rigorous, and MLflow becomes a central tool for tracking, organizing, and comparing results. I’ve always loved experiment tracking, and I wrote another article about how the course is using MLflow (even when there’s no ML involved) and the metrics we’re tracking. You can find that article here.

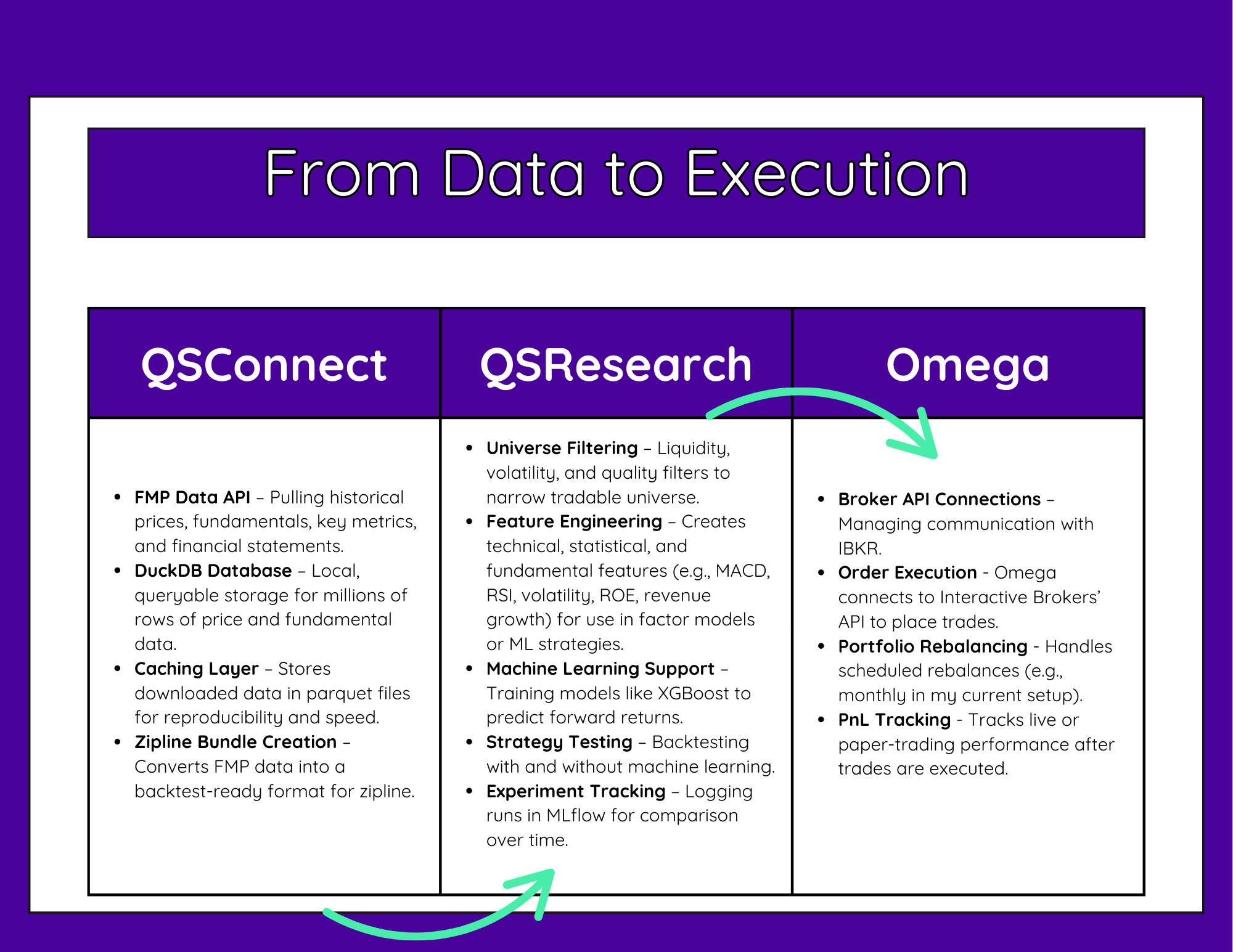

It feels like moving from that cool POC you worked on for a couple of weeks to being ready to put a real model in production. And it is. These are the Quant Science libraries we’re working with. In the last class, we were introduced to qsautomate, which orchestrates the whole pipeline.

From Data to Execution diagram showing QSConnect (FMP/DuckDB ingest), QSResearch (feature engineering & MLflow backtests), and Omega (IBKR execution, rebalancing, P&L).

Attend the Next Special Training Event

In this webinar, you’ll hear all about what it takes to manage and start working with an end-to-end algo trading pipeline.

What: Algorithmic Trading for Data Scientists

How It Will Help You: Learn how the Quant Science course teaches you to build, backtest, and track systematic trading strategies using tools like Python, Zipline, and MLflow, so you can confidently experiment, refine, and grow as a trader.

Price: Free

How To Join: Register Here

Getting and Managing a Larger Dataset with FMP

In Level 2, data access scales up. Instead of small hand-picked samples, I’m screening across thousands of stocks. This gives me:

Historical prices

Fundamental data (book value, P/E ratios, etc.)

Financial statement metrics for factor modeling

And of course, you’ll have your ETF benchmarks to compare against

The first task is setting up qsconnect to pull FMP data into a DuckDB database. This matters because:

You can store years of historical data locally

Queries are fast (DuckDB is optimized for analytics)

You avoid API throttling by working from your own database

It’s the first time I’ve had such a clean, flexible setup for pulling and storing this much data for personal use, regardless of what type of data project I’m working on. qsconnect handles data ingestion, caching, and access. For example, we might pull all stocks above a certain market cap and average volume, and then create a Zipline bundle that can be reused as we test various strategies.

The big thing for me was having a system. For years, when I’ve tried my hand at algorithmic trading, there were an overwhelming number of libraries, and I come from a non-finance background. I was very unsure of my needs and what packages I should be considering (or not considering). Since much of the content online is sponsored, and you’re often not seeing an end-to-end pipeline, I couldn’t get an idea of the big picture that would allow me to put everything together in a way that would allow me to feel confident.

Combining Value and Momentum Factors with qsresearch

This was easily my favorite part of Level 2.

In Level 1, momentum was the clear winner among the three strategies during my testing. (More about Level 1 is in a previous article here.) In Level 2, we don’t just run momentum on its own. We combine it with value features and other fundamentals, then use XGBoost to build a predictive factor model.

Instead of a simple two-factor rank, the pipeline in this clinic pulls together:

Technical momentum

Fundamental strength

Specialty growth-value blends

The workflow looks like this:

Preprocess price data from our FMP database and add technical indicators.

Join fundamentals from multiple FMP tables.

Screen the universe with liquidity, volatility, and quality filters.

Engineer composite factors.

Train XGBoost on historical data to predict which stocks are most likely to outperform for various look-forward periods.

Construct the portfolio for analysis.
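The “engineer composite factors” step is the easiest one to illustrate in isolation. The sketch below is not the course’s pipeline and uses no XGBoost: it is a plain equal-weight blend of cross-sectional z-scores, with hypothetical tickers, factor values, and weights, meant only to show how a momentum signal and a value signal on different scales can be combined into one ranking.

```python
from statistics import mean, pstdev

# Hypothetical inputs: 12-month momentum and book-to-price for a tiny universe.
stocks = {
    "AAA": {"momentum": 0.30, "book_to_price": 0.20},
    "BBB": {"momentum": 0.10, "book_to_price": 0.80},
    "CCC": {"momentum": 0.25, "book_to_price": 0.60},
    "DDD": {"momentum": -0.05, "book_to_price": 0.40},
}


def zscores(values):
    """Cross-sectional z-scores so factors on different scales can be combined."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


tickers = list(stocks)
mom_z = zscores([stocks[t]["momentum"] for t in tickers])
val_z = zscores([stocks[t]["book_to_price"] for t in tickers])

# Equal-weight blend; the 50/50 weights are an arbitrary choice for illustration.
composite = {t: 0.5 * m + 0.5 * v for t, m, v in zip(tickers, mom_z, val_z)}
ranked = sorted(composite, key=composite.get, reverse=True)

print(ranked)  # best combined score first
```

Notice that AAA has the strongest momentum but ranks third once its weak value score is blended in, which is the kind of trade-off a combined factor is meant to surface.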

Running this hybrid model in backtests produced a noticeably different risk/return profile than pure momentum. I had read the book “Quantitative Momentum” that was recommended during the course and learned about combining momentum and value strategies. In the course, they’re incorporating a momentum score from the book along with the momentum calculation they shared in Level 1. Basically, we’re using momentum’s ability to capture trends, paired with value and quality filters to avoid overpriced or fundamentally weak stocks. I’m sure I’ll investigate other strategies on my own later, but for now I plan on testing and iterating with this one.

Tracking Backtests with MLflow

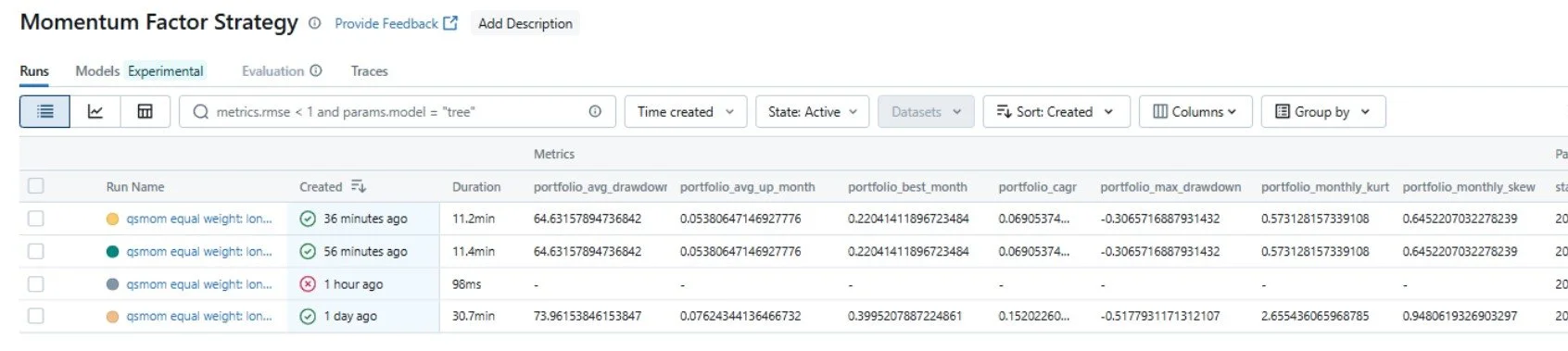

I’ve already written an article about this, but one of the best time savers for comparison and analysis is that Level 2 utilizes MLflow in the backtesting process.

Every time you run a backtest, MLflow automatically logs:

The parameters used (factor lookbacks, universe size, etc.)

Key performance metrics (Sharpe, Sortino, max drawdown)

Strategy code version

Plots and analysis files

AlphaLens and Pyfolio tear sheets

This means I can go back months later and instantly compare against my previous runs and anything else I’ve tried along the way.

Without MLflow, I’d be digging through random folders and filenames trying to remember which run was which.

Orchestrating and Deploying Strategies

The final session, Class 8, dives into advanced strategy orchestration and deployment. Essentially, this is the class where all the individual pieces we’ve been building come together into a cohesive, automated system. We covered how to run multiple strategies in parallel, go end-to-end through the pipeline, and keep everything production-ready.

I’m excited because this is the bridge between research mode and a real, running trading operation. I just watched this live class today, and I can’t wait to start playing with it. I’m hoping to spend less time on code (although it took time to really learn the different pieces) and more time designing and backtesting new strategies. This is the part where my workflow shifts from just building to actively managing and iterating on a portfolio of models in a controlled, repeatable way.

Prefect workflow timeline showing FMP fundamentals/price downloads, a Zipline bundle build, and many parallel backtest runs.

My Thoughts So Far

Level 2 gave me what I was looking for: At the end of the course, I was ready to start backtesting and trading strategies with a system that I feel is rigorous. This is the most organized my trading research has ever been.

I’m still paper trading for now, but with these tools in place, I finally feel like I have the infrastructure to iterate intelligently and get further than before.

If you’re considering joining, my affiliate link below gets you $1,000 off the course.

Quant Science Program – $1,000 Off

To hear more about the course, you can register for the next free live webinar here.

If you want to follow along with my future articles, join my email list. I’m currently only sending updates about algorithmic trading and this journey.

**The content in this article is for informational and educational purposes only. It is not intended as financial, investment, or trading advice. All strategies and opinions expressed are those of the author and do not constitute recommendations to buy, sell, or hold any financial instruments. Trading and investing involve risk, and you should conduct your own research or consult a qualified financial advisor before making any investment decisions. ** Hypothetical or simulated performance results have inherent limitations and do not represent actual trading. No representation is being made that any account will or is likely to achieve profits or losses similar to those shown. Quant Science is not a registered investment adviser or commodity trading advisor, and nothing herein should be construed as personalized advice. **

Why You Should Be Using MLflow to Track Backtests for Algo Trading (Even Without Machine Learning)

** Disclosure: This post contains affiliate links. As an affiliate of Quant Science, I may receive a commission if you sign up or make a purchase using my links, at no additional cost to you. All opinions expressed are my own and based on my personal experience. **

** Quant Science is not a registered investment adviser under the Investment Advisers Act or a commodity trading advisor under the Commodity Exchange Act. The information provided is for educational and informational purposes only and does not constitute investment, financial, or trading advice. **

As a data scientist, I’ve spent a lot of time working on machine learning models, and I’ve used tools like MLflow to track hyperparameters, performance metrics, and experiment versions so that I can compare runs and stay organized. I’ve even co-authored a book on using MLOps when building LLM-powered apps using Comet (another experiment tracking tool), published with Wiley in 2024. I’m a bigger fan of experiment tracking than many. But what we’re doing in the Quant Science course opened my eyes to another extra exciting use of experiment tracking: backtesting trading strategies.

Machine learning strategies for trading are covered in the Quant Science course, but we’re tracking our runs even when testing a strategy that does not involve machine learning.

Quant Trading Is One Giant Experiment

When you’re building systematic trading strategies, whether momentum, risk parity, or any other, every change you make is a hypothesis that you’re testing. And I couldn’t imagine keeping track of this without a tracking tool. Think about all of the potential variations:

Switch your universe from tech stocks to value stocks

Adjust your long/short thresholds (e.g., top 10% vs top 20%)

Test adding a stop-loss or trailing stop to your positions

Apply z-score normalization to your factor

Add a second factor (e.g., combine momentum with value features)

Each tweak is an opportunity to compare performance. And unless you have a reliable way to track and compare results, it’s really easy to:

Lose track of what you’ve already tried

Forget which past runs performed well

Waste time making comparisons when the data could’ve been at your fingertips

This is exactly why Quant Science is using MLflow, even for the non-machine-learning strategies.

Attend the Next Quant Science Webinar

In this webinar, you’ll hear all about what it takes to manage and start working with an end-to-end algo trading pipeline.

What: Algorithmic Trading for Data Scientists

How It Will Help You: Learn how the Quant Science course teaches you to build, backtest, and track systematic trading strategies using tools like Python, Zipline, and MLflow, so you can confidently experiment, refine, and grow as a trader.

Price: Free

How To Join: Register Here

So, How Are We Using MLflow?

After running a strategy, I’m looking at the performance of this strategy compared to a benchmark, and I’m comparing it to previous strategies that I’ve tried. Matt Dancho built this out so that all of the parameters and tear sheet output are stored, and MLflow logs and keeps track of the output.

Although I love the tear sheets from AlphaLens and Pyfolio, they don’t give me the ability to look across runs. For each backtest, Matt set up MLflow to track a rich set of performance metrics that actually help me decide whether a strategy is viable (and better than previous runs):

Total Return

CAGR (Compound Annual Growth Rate)

Sharpe, Sortino, and Calmar Ratios

Max Drawdown and Average Drawdown

Win Rate by Year or Rolling Period

Twelve-month return and volatility

Best and worst day/month/year performance
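A few of these metrics are simple enough to compute by hand, which helps when sanity-checking what shows up in the dashboard. This is a minimal sketch on made-up calendar-year returns. The variable names are hypothetical, and the course’s own metric calculations may use different conventions.

```python
import math

# Hypothetical calendar-year returns from a backtest.
yearly_returns = {2019: 0.21, 2020: -0.08, 2021: 0.15, 2022: -0.12, 2023: 0.18}

# Compound the yearly returns into one growth multiple.
growth = math.prod(1 + r for r in yearly_returns.values())

total_return = growth - 1
cagr = growth ** (1 / len(yearly_returns)) - 1          # compound annual growth rate
win_rate = sum(r > 0 for r in yearly_returns.values()) / len(yearly_returns)
best_year = max(yearly_returns, key=yearly_returns.get)
worst_year = min(yearly_returns, key=yearly_returns.get)

print(f"Total return: {total_return:.2%}")
print(f"CAGR:         {cagr:.2%}")
print(f"Win rate:     {win_rate:.0%} of years positive")
print(f"Best year:    {best_year} ({yearly_returns[best_year]:.0%})")
print(f"Worst year:   {worst_year} ({yearly_returns[worst_year]:.0%})")
```

CAGR is the constant yearly rate that would have produced the same total return, which is why it comes out lower than the simple average of the yearly numbers when returns are volatile.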

We’re also logging the exact configuration of the strategy:

The momentum lookback windows (e.g., 21, 252, 126)

Universe filters (e.g., min market cap, price > $10)

Whether the strategy includes short positions

Rebalance frequency

Portfolio construction method

Quant Science built their QSResearch library with a configuration-first approach, meaning that nearly every parameter for the backtest, from rebalance frequency to factor lookbacks, to universe filters, is specified in a single CONFIG dictionary.

That setup makes it really easy for MLflow to automatically log every parameter in a structured way. So even when I'm not using a machine learning model, MLflow still captures all the relevant strategy inputs.
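To show why a single config dictionary pairs so well with experiment tracking, here is a minimal sketch. The `CONFIG` keys, `flatten_config`, and `log_backtest` are hypothetical illustrations, not QSResearch’s actual structure or code. The MLflow calls used (`mlflow.start_run`, `mlflow.log_params`, `mlflow.log_metrics`) are part of MLflow’s tracking API.

```python
def flatten_config(config, prefix=""):
    """Flatten a nested dict into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat = {}
    for key, value in config.items():
        name = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten_config(value, name))
        else:
            flat[name] = value
    return flat


# Hypothetical config; the real QSResearch CONFIG keys are not shown in this article.
CONFIG = {
    "bundle": "my-bundle",
    "capital_base": 100_000,
    "rebalance": {"frequency": "monthly"},
    "screen": {"min_market_cap": 1_000_000_000, "min_price": 10},
    "momentum": {"lookbacks": [21, 126, 252]},
}


def log_backtest(config, metrics, run_name=None):
    """Log one backtest as an MLflow run (requires `pip install mlflow`)."""
    import mlflow  # imported here so flatten_config works without MLflow installed

    with mlflow.start_run(run_name=run_name):
        mlflow.log_params(flatten_config(config))  # one parameter per dotted key
        mlflow.log_metrics(metrics)                # e.g. {"sharpe": 1.1, "max_drawdown": -0.2}


params = flatten_config(CONFIG)
print(params["screen.min_price"])
```

Because every setting lives in one dictionary, a single call records the whole strategy definition, and two runs can be compared parameter by parameter in the MLflow UI.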

This view in MLflow shows the parameters that were passed into my backtest config. Everything from the bundle I used, the starting capital for the backtest, the rebalance schedule, and the exact filters and settings for screening, preprocessing, and factor construction. It’s all captured automatically:

Next is one of my favorite parts. The MLflow dashboard shows a side-by-side comparison of all my recent backtest runs. I can instantly compare by metrics like Sharpe ratio, drawdown, or monthly return to see which versions of the strategy performed best. It makes it super easy to spot what’s working, what’s not, and which run is worth digging deeper into. It’s easy to get visibility into every experiment.

These aren’t great strategies. I’ve just started, and some of them have high drawdowns, but that’s the point of tracking. I can quickly compare results, spot tradeoffs, and iterate toward better performance. This dashboard makes my strategy development feel like an actual research process.

I can dig deep into the performance compared to a benchmark. Here, the benchmark is AAPL, so my portfolio got crushed, but at least I’m not trying to oversell myself over here.

The AlphaLens and Pyfolio tear sheets are easy to find. They’re not in some poorly named file on my desktop; they’re stored with the experiment run.

AlphaLens:

Pyfolio:

And all of this is tracked automatically. I’m not an organized person by nature. I’m not the type of person who is going to save all of my AlphaLens tear sheets in a neat folder with a naming convention that gives me useful context. But now I don’t need to! It’s all right here. The experiments are also persistent in MLflow. I can access them even if my local output files get deleted.

How It’s Different from ML Tracking

Historically, I’ve always used experiment tracking to store the model type, hyperparameters, and training/validation accuracy metrics. Here, we’re treating the backtest as the experiment, storing all of the associated metrics for a given look-forward period, and comparing against a benchmark.

It's the same principle, but applied to a domain where reproducibility, auditability, and comparison are just as important.

It Makes Me Smarter, Faster

MLflow has become my second brain in this process.

Instead of:

“Wait, didn’t I already test that?”

I can just look it up.

Instead of:

“That strategy looked promising, but I can’t remember what threshold I used.”

I have it logged.

I can focus on analyzing the data, rather than trying to aggregate it. I might’ve felt overwhelmed (or missed important pieces) otherwise.

Final Thoughts

Honestly, I’m having a ridiculous amount of fun with this.

I came into the Quant Science program with a strong data background, but this kind of structured experimentation with financial strategies instead of machine learning models is just so satisfying. It feels like a playground for data nerds, but with actual portfolio implications. This week, I will be spending a lot of time backtesting with ML, but I wanted to share that I’m enjoying experiment tracking even without it.

What I love most is how this whole setup takes away the busywork. I’m not manually screenshotting backtests or trying to remember which CSV matched which strategy. It’s all being logged for me, and that lets me focus more on the strategy.

There’s so much more to test, compare, and analyze.

If you’re curious about the Quant Science program, check out their next webinar here.

**The content in this article is for informational and educational purposes only. It is not intended as financial, investment, or trading advice. All strategies and opinions expressed are those of the author and do not constitute recommendations to buy, sell, or hold any financial instruments. Trading and investing involve risk, and you should conduct your own research or consult a qualified financial advisor before making any investment decisions. ** Hypothetical or simulated performance results have inherent limitations and do not represent actual trading. No representation is being made that any account will or is likely to achieve profits or losses similar to those shown. Quant Science is not a registered investment adviser or commodity trading advisor, and nothing herein should be construed as personalized advice. **

A Data Scientist’s Journey into Algorithmic Trading with Quant Science

** Disclosure: This post contains affiliate links. As an affiliate of Quant Science, I may receive a commission if you sign up or make a purchase using my links, at no additional cost to you. All opinions expressed are my own and based on my personal experience. **

** Quant Science is not a registered investment adviser under the Investment Advisers Act or a commodity trading advisor under the Commodity Exchange Act. The information provided is for educational and informational purposes only and does not constitute investment, financial, or trading advice. **

As a data scientist, I’ve spent years working with data, mostly for business use cases like customer churn, behavioral segmentation, or creating dashboards. But for a very long time (or at least since 2007), I’ve been itching to actually make algorithmic trading work for me. It has always just felt like that side project that could marry data, potentially a return on my investments, and wouldn’t require scheduling any meetings.

My attempts have never yielded results better than neutral, and I’ve always hesitated to invest in trading courses (of any variety) because it’s hard to know whether someone is legit or if they just make their money from selling courses. Then Matt Dancho (Founder of Business Science) launched a course with Jason Strimpel. I’ve known Matt since 2018, I traveled with him to R Conf one year ago, and I had already taken 4 of his Business Science courses. I was so excited that he was building this program, because I’ve been dying to learn, but wasn’t willing to invest anything more than Coursera money with anyone else. To level set, Jason is the one who has actually taught the majority of the live instruction in this course; he has over 20 years of experience trading.

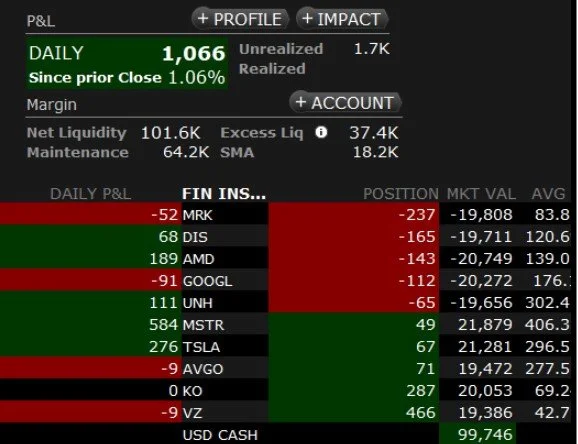

Matt and Jason’s new program is called Quant Science. I’d say this program is for people who already know their way around Python and have a basic understanding of the markets. The day you sign up, you get access to the private Omega repository and follow their tutorial for getting set up with Interactive Brokers and Omega, so you’ll want to be ready to rock.

If you’ve been following my work, you know I love sharing about what I’m learning and building in public. These articles double as my personal notes for later on when people ask me questions about my experience. This is an affiliate article, but the views and opinions are my honest thoughts on the course. The affiliate link gives you $1,000 off the Quant Science program. At the time of writing this article, the discount is not available anywhere else.

Here, we’ll go through:

What I’ve tried before in trading

What we’ve covered so far in the course (I’m halfway through)

My honest review of the Quant Science Program

How it compares to Business Science Courses

What’s next

What I Tried Before

Before this course, I had dipped my toes into trading with:

2007 - started watching Jim Cramer, did poorly during the housing bubble in 2008, like everyone else, and stepped away.

Got on the Robinhood waiting list in 2013. Picked up a share here and there, maybe only played with $3k. No advanced charting, and you’re dealing with slippage.

In 2000, tried learning technical analysis by reading books. I always felt like I could squint at the chart and find multiple different conclusions, even more so if I considered different time periods.

2022, I tried a triangular arbitrage strategy using R and crypto. Lost a little money due to liquidity issues (I was using small exchanges with lots of coin options).

Somewhere in that history, I also tried learning FOREX.

So I’m not new, but I’m also not seasoned; I’ve been a bit all over the place. For the course, I wish I had a better recollection of what I had learned over the years. I’m currently reading books and leaning on ChatGPT to look up terms, but I appreciate being challenged, and there are plenty of resources available for a quick refresher on the finance side. Personally, I’m glad that we’re diving right in.

In all of my previous attempts, I had never tried backtesting. I'd watched a couple of YouTube videos on the topic, but I wasn’t even sure what I wanted to tweak and test, and the number of library and data options was daunting.

What We’ve Covered So Far in the Course

The Quant Science program currently consists of two different courses (Level 1 and Level 2). They’ve just launched some new libraries that they built for the Level 2 course, so they’re still building this out and bringing everyone along for the ride. At the moment, I’ve just finished Level 1. I’ll be sure to write another article as I journey into Level 2. This is a live program, but you also have access to recordings in case you miss a class or need to review material.

The course starts by having you get set up with Interactive Brokers. IB was their choice because it has robust API access, low latency, fast execution, and they haven’t had problems with people outside the US being able to leverage it. Once you have your Interactive Brokers trading account set up, in the first live clinic, you’ll get three strategies, and you'll start paper trading with those.

Level 1:

3 Core Strategies

Momentum Strategy

Focuses on buying assets that have been trending upward, under the assumption that winners keep winning (at least in the short term). Matt often refers to momentum being the real winner for him. We should get his actual full setup in Level 2.

Risk Parity Strategy

Instead of allocating capital equally, this approach balances risk across asset classes.

Crack Spread Strategy

A classic commodities arbitrage trade involving crude oil and its refined products (like gasoline and heating oil). It's a great example of a relative value strategy and a taste of institutional-style trading logic.
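To make the last two ideas concrete, here is a minimal sketch in plain Python. It is not the course's code. The function names are made up for illustration, the risk parity piece is the naive inverse-volatility version (a fuller approach would also account for correlations between assets), and the crack spread uses the common 3-2-1 convention: three barrels of crude against two barrels of gasoline and one of heating oil, with the products quoted per gallon.

```python
import statistics

GALLONS_PER_BARREL = 42  # refined products are quoted per gallon, crude per barrel


def inverse_volatility_weights(returns_by_asset):
    """Naive risk parity: weight each asset by 1 / volatility, then normalize to 1."""
    inverse_vol = {
        asset: 1.0 / statistics.stdev(returns)
        for asset, returns in returns_by_asset.items()
    }
    total = sum(inverse_vol.values())
    return {asset: value / total for asset, value in inverse_vol.items()}


def crack_spread_321(crude_per_bbl, gasoline_per_gal, heating_oil_per_gal):
    """3-2-1 crack spread in dollars per barrel of crude."""
    product_value = (2 * gasoline_per_gal + heating_oil_per_gal) * GALLONS_PER_BARREL
    return (product_value - 3 * crude_per_bbl) / 3


# Made-up numbers, only to show the shape of the inputs.
weights = inverse_volatility_weights({
    "stocks": [0.02, -0.02, 0.02, -0.02],
    "bonds": [0.01, -0.01, 0.01, -0.01],
})
spread = crack_spread_321(crude_per_bbl=80.0, gasoline_per_gal=2.5, heating_oil_per_gal=2.7)
```

In the toy data the bond returns are half as volatile as the stock returns, so bonds end up with twice the weight. That is the whole idea of balancing risk rather than dollars.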

For example, with the momentum strategy, the provided code downloads a substantial amount of data using Jason and Matt’s proprietary library, “Omega”, scans stocks based on factors such as market capitalization and average volume, calculates the momentum factor, and then executes trades, all within one notebook. The Level 1 lectures have mainly come with notebooks, and Level 2 will come with scripts. When it’s time to rebalance, the notebook looks at what you’re already holding, divests appropriately, and picks up new stocks based on the momentum calculation. This provides you with the building blocks to combine strategies or use them manually as is.
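The rebalance logic is easier to picture with a small example. This is a sketch under my own assumptions, not the Omega notebook: momentum here is simply the trailing return over a lookback window, and `momentum_scores`, `pick_top`, and `rebalance_orders` are hypothetical helper names.

```python
def momentum_scores(prices_by_ticker, lookback):
    """Trailing return over `lookback` periods for each ticker with enough history."""
    scores = {}
    for ticker, prices in prices_by_ticker.items():
        if len(prices) <= lookback:
            continue  # skip tickers without enough price history
        scores[ticker] = prices[-1] / prices[-1 - lookback] - 1.0
    return scores


def pick_top(scores, n):
    """Tickers with the n highest momentum scores, best first."""
    return sorted(scores, key=scores.get, reverse=True)[:n]


def rebalance_orders(current_holdings, target):
    """Sell what fell out of the target list, buy what is new, hold the overlap."""
    current, wanted = set(current_holdings), set(target)
    return {
        "sell": sorted(current - wanted),
        "buy": sorted(wanted - current),
        "hold": sorted(current & wanted),
    }


# Made-up prices for three hypothetical tickers.
prices = {"AAA": [10, 11, 12], "BBB": [10, 10, 9], "CCC": [10, 12, 15]}
target = pick_top(momentum_scores(prices, lookback=2), n=2)
orders = rebalance_orders(current_holdings=["AAA", "BBB"], target=target)
```

A real version would also size the positions and filter the universe first (market cap, average volume), but the sell/buy/hold split is the core of the rebalance step.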

If you want to start modifying and optimizing strategies, you’ll need to backtest. They cover two methods for backtesting, discussing the pros and cons of two different libraries/methods, and when it makes sense to use them.

Backtesting

In the 2nd Level 1 live clinic, they covered:

Zipline – An event-based backtester originally developed by Quantopian. This was used for both the momentum and risk parity strategies.

VectorBT – A faster and more flexible factor-based backtester, especially suitable for custom metrics and portfolio optimization. Below is a picture from the backtest of the crack spread strategy.

Risk Management and Analysis

In the 3rd Level 1 class, we examined the analysis of the backtest results using the following libraries, and Jason highlighted the key metrics to consider when evaluating different strategies.

Pyfolio – To dig into performance analytics for event-based strategies. The example given was with the risk parity strategy.

Alphalens – For factor-based analysis: How predictive is the signal of a factor? We were given a notebook using alphalens for the momentum strategy.
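I'm not listing the exact metrics from class here, but two that show up in almost any performance report are the Sharpe ratio and the maximum drawdown. Here is a rough, library-free sketch of both, assuming daily returns, 252 trading days per year, and a risk-free rate of zero by default. The function names are mine, not pyfolio's.

```python
import math
import statistics


def sharpe_ratio(returns, periods_per_year=252, risk_free_rate=0.0):
    """Annualized Sharpe ratio from per-period returns (needs at least two returns)."""
    excess = [r - risk_free_rate / periods_per_year for r in returns]
    return statistics.mean(excess) / statistics.stdev(excess) * math.sqrt(periods_per_year)


def max_drawdown(returns):
    """Largest peak-to-trough drop of the compounded equity curve, as a negative fraction."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = min(worst, equity / peak - 1.0)
    return worst
```

For example, `max_drawdown([0.10, -0.50, 0.20])` reports a drawdown of about 50%, because the equity curve falls by half from its peak before it starts to recover.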

The exposure to both platforms (Zipline and vectorbt) was helpful; they each have their strengths, and understanding both lets you pick the right tool for the job. I might make a couple of videos about how to analyze the plots that come out of pyfolio and alphalens, since I know it’s something I would’ve liked to watch myself.

The last 2 live classes were around more advanced backtesting and evaluating an xgboost strategy. I was on vacation during these classes and still need to take a deeper look at these. I’m really just documenting my experience as I go along, and this is as far as I’ve gotten right now.

How It Compares to Business Science Courses

I’ve also taken courses from Business Science (which I loved), but Quant Science is quite different. The thing with live courses is that once you sign up, you have to wait a couple of weeks to start. Quant Science hops right into it. In the first lecture, you’re looking at different strategies. In a Business Science course, it’s very project-based, but you’re covering the building blocks much more slowly. I love both, but the prerequisites and how the courses are run are different. The Quant Science Discord for students is quite active, and all of the questions are answered pretty quickly by Jason and Matt.

Quant Science assumes a higher comfort level with code. I’m probably troubleshooting things outside the course and testing things independently more than I would if I were following along with a Python course.

What’s Next:

I’ve come away with reusable templates and new frameworks for thinking about strategy evaluation, and I’m now set up to keep iterating. I’ll be starting Level 2 soon. Level 2 is much more about getting to a full production system utilizing tools like Luigi, MLflow, and Prefect.

Currently, I’ve placed a ton of FMP data in a DuckDB database (they give you a discount on a data subscription, but that’s a little over $30/month), and I’m ready to get started with Level 2. I literally just downloaded the data for Level 2 and haven’t looked at it yet.

I’m still paper trading. My results? I haven’t really had a chance to see any yet. I’ve been rebalancing and switching strategies so much that I wouldn’t really expect any positive results. I plan to keep sharing as I go along, so definitely join me on this journey. I’m posting a picture of my paper trading account so I can track and share everything.

If you’re interested in joining, I look forward to seeing you in the Discord. After the course ends, previous cohorts stay in the Discord for support, community, and to receive the latest updates. I’m pumped so far. Again, this link gives you $1,000 off of the price of the course and is not yet offered anywhere else: Quant Science Program

If you’re interested in following my progress through the course or my results as I work through it, sign up for my email list. The only emails you’ll receive will be related to algorithmic trading.

Thank you so much for reading! Let me know if this was helpful. You can always find me on LinkedIn.

The content in this article is for informational and educational purposes only. It is not intended as financial, investment, or trading advice. All strategies and opinions expressed are those of the author and do not constitute recommendations to buy, sell, or hold any financial instruments. Trading and investing involve risk, and you should conduct your own research or consult a qualified financial advisor before making any investment decisions. ** Hypothetical or simulated performance results have inherent limitations and do not represent actual trading. No representation is being made that any account will or is likely to achieve profits or losses similar to those shown. Quant Science is not a registered investment adviser or commodity trading advisor, and nothing herein should be construed as personalized advice. **

Creating Your Own Chatbot: A Beginner-Friendly Tutorial with OpenAI, LangChain, Gradio, and Wikipedia

If you’re like me, you’ve been hearing a ton about LangChain and OpenAI. I was curious to see how difficult it would be to build one of these chatbots myself. It’s been my experience historically that if I just dig in, I find that topics are often more approachable than I assume, especially since the python libraries coming out are quite high-level. I was also super interested in learning what langchain’s function is, since I’d been hearing so much about it. In this beginner-friendly tutorial, I’ll guide you through the process of creating your own chatbot using Python and leveraging powerful tools such as OpenAI, LangChain, Gradio, and Wikipedia. Even if you’re new to Python or have never built a chatbot before, fear not — we’ll take it step by step. Let’s get started on your chatbot development adventure!

We’ll cover a couple of things:

About the app we’re building

What is LangChain?

Tutorial

The App We’re Building:

Here we’re going to build a quick Gradio app that will allow us to leverage OpenAI’s GPT-3.5 to enter a question, get a response returned, and we’re able to customize the behavior of our chatbot by modifying different parameters. I was super impressed with how easy it was to create a Gradio web app with a couple lines of code. Of course, this is a “hello world” level example, but still so cool.

The parameters that we’re able to configure are temperature and model_name. When the temperature is set to zero, we get a very deterministic response; as the value gets larger, the response becomes more random. A temperature between 0.7 and 0.9 is often used for creative tasks, although the higher you set the number, the more you might need to worry about hallucinations.
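If you're wondering why temperature has this effect, here is a small sketch of the general idea behind temperature sampling: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. This is the textbook version, not OpenAI's actual code, and the logits below are made up. A temperature of exactly zero can't be divided by, so the sketch only accepts values above zero.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be greater than 0 in this sketch")
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
cold = softmax_with_temperature(logits, temperature=0.1)
warm = softmax_with_temperature(logits, temperature=1.5)
```

With the low temperature almost all of the probability lands on the top-scoring token, which is why the output feels deterministic. With the higher temperature the probabilities are spread out, so sampling picks the less likely tokens more often.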

This is a picture of the finished web app:

What is LangChain?:

I’ve learned that LangChain is super cool, no wonder everyone is talking about it. Basically, if you ask a complex question, you’ll leverage a model (potentially multiple models), and a number of “tools” to get to your answer. LangChain is the library that decides what you need and in what order and then puts all the pieces together to get your answer. “Justin Bieber’s age times 4” might require that LangChain goes to Wikipedia to get the birthdate if the answer isn’t in the LLM training data, and then goes to a math tool to multiply the number by 4. Wikipedia and the math tool in this case are not part of LangChain, but LangChain will decide what it needs to leverage and in what order, and then execute.
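Here is a toy illustration of that chain of steps. To be clear, this is not how LangChain is implemented. In a real agent the LLM decides which tool to call next, while in this sketch the order is hard-coded and the "tools" are hypothetical stand-in functions (the lookup is a tiny dictionary instead of a Wikipedia call).

```python
from datetime import date


def lookup_birthdate(name):
    """Stand-in for a Wikipedia lookup tool."""
    known = {"Justin Bieber": date(1994, 3, 1)}
    return known[name]


def age_on(birthdate, today):
    """Whole years between birthdate and today."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


def multiply(a, b):
    """Stand-in for a math tool."""
    return a * b


def answer_age_times(name, factor, today):
    """Step 1: look up the birthdate. Step 2: compute the age. Step 3: multiply."""
    birthdate = lookup_birthdate(name)
    return multiply(age_on(birthdate, today), factor)
```

Each step feeds the next one, and that handoff is the part an agent automates for you.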

App building tutorial:

For this I suggest opening my Google Colab. All you’d need to do here is enter your own API key and run the cells, then you’d have a working starter app. To get an API key, you’d go to OpenAI.

First we pip install our packages, import a set of libraries, and set our API key.

%pip install langchain openai wikipedia gradio

# Importing necessary dependencies
import os # used for working with environment variables, so we can store our API key
from langchain.chat_models import ChatOpenAI
from langchain.memory import ConversationBufferMemory
from langchain.agents import (
    load_tools,
    initialize_agent,
    AgentType # "Agents use an LLM to determine which actions to take and in what order" - LangChain docs
)
import langchain
import gradio as gr

langchain.debug = True # verbose thought process logs as it runs

# Set the value of the 'OPENAI_API_KEY' environment variable. You get $5 free when you sign up
os.environ['OPENAI_API_KEY'] = '[YOUR API KEY HERE]'

Hello, World!

Now that we have our libraries, we’re ready to start building our chatbot.

First, we instantiate the “ChatOpenAI” class, establishing a connection to the ChatGPT language model. By specifying a temperature value of 0 and model name as “gpt-3.5-turbo,” we configure the behavior of the language model. You could use a different model or a higher value of temperature. The “verbose=True” setting enables detailed logging to provide insights into the chatbot’s thought process.

Next, we load the necessary tools, including the “wikipedia” module, using the “load_tools” function. This step connects our chatbot to all of the information available in Wikipedia. The LangChain functions allow seamless integration with ChatGPT and determine if and when the Wikipedia tool is needed during conversations.

To enable memory and maintain conversation history, we instantiate the “ConversationBufferMemory” class. By specifying a memory key as “chat_history” and setting “return_messages=True,” we ensure that the chatbot retains the context of previous interactions.

Finally, we initialize the agent for conversation using the loaded tools. The “initialize_agent” function takes in the tools, the ChatGPT language model, and specifies the agent type as “CHAT_CONVERSATIONAL_REACT_DESCRIPTION.” This agent facilitates interactive and responsive conversations while providing detailed logging with “verbose=True.” The “handle_parsing_errors” message assists in error checking, and the memory component allows the chatbot to maintain coherence throughout the conversation.

With these code snippets, our chatbot is now equipped with the necessary connections, tools, memory, and agent initialization to engage in captivating and intelligent conversations.

# Creating an instance of the ChatOpenAI class for conversation - this is the connection to ChatGPT
chat = ChatOpenAI(temperature=0, model_name="gpt-3.5-turbo", verbose=True)

# This is a connection to Wikipedia's data. LangChain will start with ChatGPT and then determine if it needs the Wikipedia tool.
tools = load_tools(["wikipedia"], llm=chat)

# This was just copied from the docs, but we need it to have memory.
memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

# Initializing the agent for conversation using the loaded tools - Give it the tools, the LLM,
# and the GPT connection.
agent = initialize_agent(
    tools,
    chat,
    agent=AgentType.CHAT_CONVERSATIONAL_REACT_DESCRIPTION,
    verbose=True,
    handle_parsing_errors="Check your output and make sure it conforms!",
    memory=memory
)

This next line of code defines a function called “call_agent” that, when invoked, enables users to interact with the chatbot by posing questions or providing inputs. The main reason for needing the “call_agent” function is that gradio will automatically pass the input as a parameter to our function, and the agent.run call uses a kwarg. The “call_agent” function utilizes the “agent.run()” method, which triggers the agent to process the user’s question. The agent leverages the tools, language model, and memory components to generate an appropriate response based on the input. With this function, the chatbot becomes fully operational, yay!

# The chatbot is ready now and you can ask it questions. This function is used to call the agent and get a response based on the question asked
def call_agent(user_question):
    response = agent.run(input=user_question)
    return response

Next we need our web app. Gradio allows us to design and launch an interactive interface that facilitates seamless communication between users and the chatbot.

Inside the with gr.Blocks() as demo context, we define the components of our Gradio interface. We begin by creating a title using the gr.HTML function, then we set up a gr.Textbox component. This textbox serves as the interface where users can enter their questions or queries for the chatbot.

For displaying the chatbot’s responses, we create another gr.Textbox. This textbox will show the chatbot’s generated responses to the user’s input.

To trigger the chatbot’s response generation, we include a gr.Button. When the user clicks this button, it calls the call_agent function we defined earlier, passing the user’s input from the input textbox and displaying the chatbot’s response in the output textbox.

Finally, we launch the Gradio interface using demo.launch(). By setting share=True, we allow others to access and interact with the chatbot through a shareable link. The debug=True option enables verbose logs for troubleshooting during the development process.

# Creating a Gradio interface for the chatbot
with gr.Blocks() as demo:
    title = gr.HTML("<h1>The Data Moves Me Chatbot</h1>")
    input = gr.Textbox(label="What would you like to know?") # Textbox for user input
    output = gr.Textbox(label="Here ya go, Champ:") # Textbox for chatbot response
    btn = gr.Button("Gimme the answer") # Button to trigger the agent call
    btn.click(fn=call_agent, inputs=input, outputs=output)

# Launching the Gradio interface
demo.launch(share=True, debug=True)

Summary:

Hopefully this gave you a working app and some context about how all of these pieces work together. I was pleasantly surprised with how intuitive the openai and langchain libraries were for getting started (I haven’t gotten much further than this very introductory example though) and how easy it was to stand up a gradio app.

If you've tried Coursera or other MOOCs to learn python and you're still looking for the course that'll take you much further, like working in VS Code, setting up your environment, and learning through realistic projects.. this is the course I would recommend: Python Course.

By starting with chatbot development, we now have the potential to build intelligent virtual assistants, customer support bots, or interactive information providers. The possibilities are limitless if you continue to expand and enhance your chatbot's capabilities. Please let me know if you take this beginning and do something neat with it, I’d love to hear from you. Happy coding!

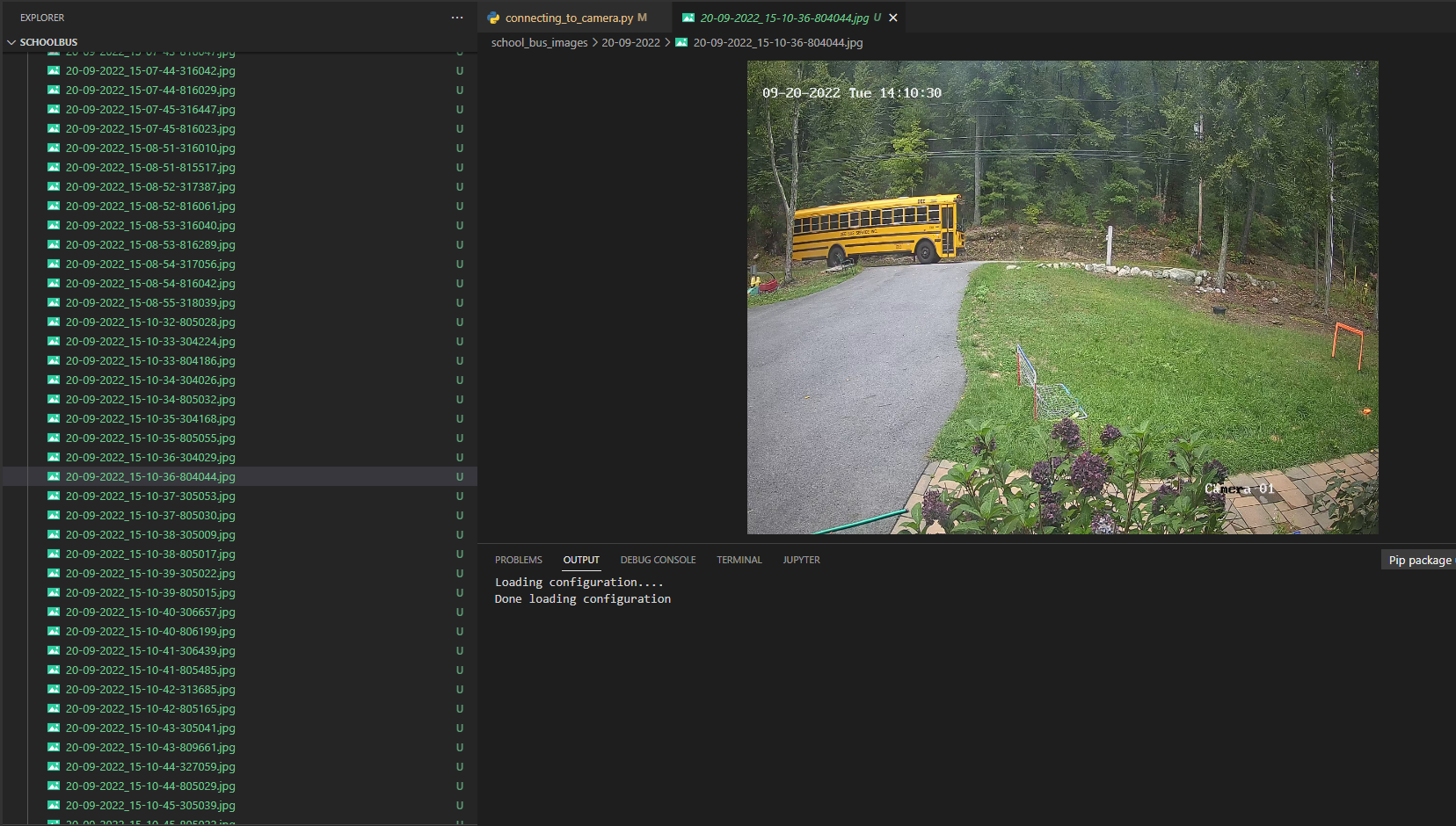

Object Detection Using YOLOv5 Tutorial – Part 3

Very exciting, you've made it to the 3rd and final part of the bus detection with YOLOv5 tutorial. If you haven't been following along this whole time, you can read about the camera setup, data collection and annotation process here. The 2nd part of the tutorial focused on getting the data out of Roboflow, creating a CometML data artifact and training the model. That 2nd article is here.

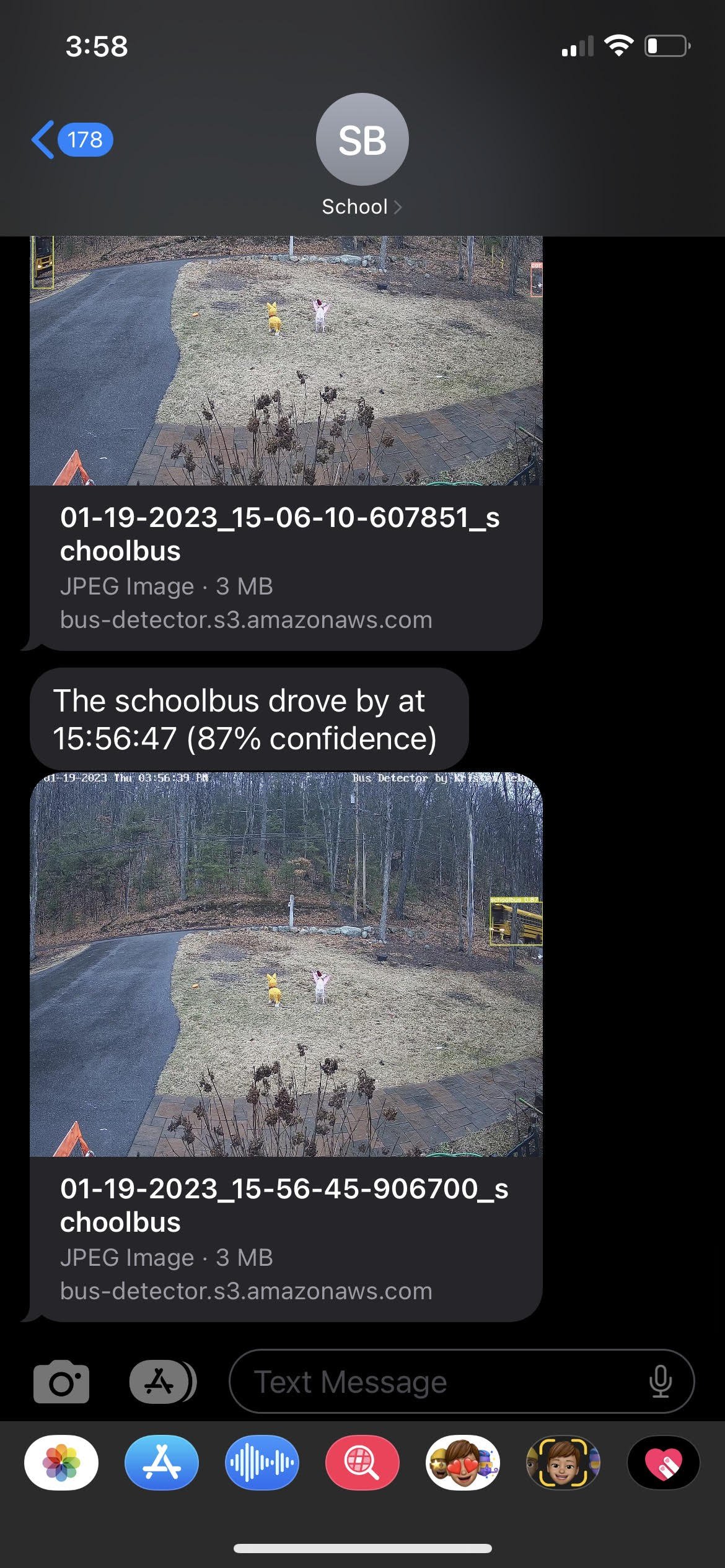

In this article, we're going to take the trained model and actually start doing live detection. Once we detect the bus we'll receive a text. Here are the steps we're going to go through to set that up:

Choose the best training run

Run live detection

Send a text using AWS

Before we get started, if you’ve tried Coursera or other MOOCs to learn python and you’re still looking for the course that’ll take you much further, like working in VS Code, setting up your environment, and learning through realistic projects.. this is the course I used: Python Course.

Choosing the best training run:

Here we're obviously going to be using Comet. For this project, there were a couple of considerations. When I started with too few images, there was more value in picking the right model; currently it looks like any of my models trained on the larger set of images would work just fine. Basically, I wanted to minimize false positives. I definitely did not want a text when a neighbor was driving by, because these texts are going to my phone every day, and that would be annoying. Similarly, it's not a big deal if my model misses a couple of frames of the bus, as long as it consistently catches the bus driving past my house (each pass consists of a number of frames). We want our precision to be very close to 1.
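As a quick refresher on why precision is the metric to watch here, this small sketch computes precision and recall from raw counts. The counts in the usage note are made up for illustration and are not from my training runs.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision: how many alerts were really the bus. Recall: how many bus frames were caught."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall
```

For instance, `precision_recall(90, 0, 30)` gives a precision of 1.0 and a recall of 0.75: a quarter of the bus frames are missed, but nothing that isn't a bus ever triggers a text. For this project that trade-off is perfectly fine.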

Run live detection:

It's showtime, folks! For the detection, I forked the YOLOv5 detect script. A link to my copy is here. I need to be able to run my own python code each time the model detects the schoolbus. There are many convenient output formats provided by the yolov5 detect.py script, but for this project I decided to add an additional parameter to the script called "on_objects_detected". This parameter is a reference to a function, and I altered detect.py to call the function whenever it detects objects in the stream. When it calls the function, it also provides a list of detected objects and the annotated image. With this in place, I can define my own function which sends a text message alert and pass that function name to the yolov5 detect script in order to connect the model to my AWS notification code. You can 'CTRL + F' my name 'Kristen' to see the places where I added lines of code and comments.
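The callback idea is simple enough to show without any of the YOLOv5 code. This is a stripped-down sketch of the pattern only. `run_detection`, `detect`, and `record_alert` are hypothetical names, and the "frames" are plain strings standing in for images.

```python
def run_detection(frames, detect, on_objects_detected=None):
    """Call on_objects_detected(objects, frame) whenever detect() finds something."""
    for frame in frames:
        objects = detect(frame)
        if objects and on_objects_detected is not None:
            on_objects_detected(objects, frame)


alerts = []


def record_alert(objects, frame):
    """Stand-in for the function that would send the text message."""
    alerts.append((objects, frame))


def fake_detect(frame):
    """Stand-in for the model: 'detects' a schoolbus only in the frame named 'bus'."""
    return ["schoolbus"] if frame == "bus" else []


run_detection(["empty street", "bus"], fake_detect, on_objects_detected=record_alert)
```

The nice part of this design is that the detection loop knows nothing about AWS or text messages. It only knows that it should hand the detections to whatever function it was given.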

Sending a text alert:

This was actually my first time using AWS, so I had to set up a new account. This Medium article explains how you can set up an AWS account (ignore the Go SDK part, I know nothing about Go). I then used the boto3 library to send the SMS.

import os
os.environ['AWS_SHARED_CREDENTIALS_FILE'] = '.aws_credentials'
import boto3

def test_aws_access() -> bool:
    """
    We only try to use aws on detection, so I call this on startup of detect_bus.py to make sure credentials
    are working and everything. I got sick of having the AWS code fail hours after starting up detect_bus.py...
    I googled how to check if boto3 is authenticated, and found this:
    https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/sts.html#STS.Client.get_caller_identity
    """
    try:
        resp = boto3.client('sts').get_caller_identity()
        print(f'AWS credentials working.')
        return True
    except Exception as e:
        print(f'Failed to validate AWS authentication: {e}')
        return False

def send_sms(msg):
    boto3.client('sns').publish(
        TopicArn='arn:aws:sns:us-east-1:916437080264:detect_bus',
        Message=msg,
        Subject='bus detector',
        MessageStructure='string')

def save_file(file_path, content_type='image/jpeg'):
    """Save a file to our s3 bucket (file storage in AWS) because we wanted to include an image in the text"""
    client = boto3.client('s3')
    client.upload_file(file_path, 'bus-detector', file_path,
                       ExtraArgs={'ACL': 'public-read', 'ContentType': content_type})
    return f'https://bus-detector.s3.amazonaws.com/{file_path}'

Since we're passing the photo here, you'll get to see the detected picture in the text you receive (below). I went out of my way to add this because I wanted to see what was detected. If it was not a picture of a bus for some reason, I'd like to know what it was actually detecting. Having this information could help inform what type of training data I should add if it wasn't working well.

I also added logic so that I was only notified of the bus once every minute, I certainly don't need a text for each frame of the bus in front of my house. Luckily, it's been working very well. I haven't missed a bus. I have had a couple false positives, but they haven't been in the morning and it's a rare issue.
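If you want to add the same kind of once-a-minute limit, here is one way it could look. This is a sketch rather than the exact code from my repo. The `Cooldown` class is a made-up name, and the clock is passed in so the logic can be tested without actually waiting a minute.

```python
import time


class Cooldown:
    """Allows an action at most once every `seconds` seconds."""

    def __init__(self, seconds, clock=time.monotonic):
        self.seconds = seconds
        self.clock = clock
        self._last = None  # time of the last allowed action

    def ready(self):
        """Return True (and restart the timer) if enough time has passed."""
        now = self.clock()
        if self._last is None or now - self._last >= self.seconds:
            self._last = now
            return True
        return False
```

In the detection callback you would create `sms_cooldown = Cooldown(60)` once at startup and only send the text when `sms_cooldown.ready()` returns True. Every other frame of the same bus pass is quietly skipped.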

In order to be allowed to send text messages through AWS SNS in the US, I'm required to have a toll-free number which is registered and verified (AWS docs). Luckily, AWS can provide me with a toll-free number of my own for $2/month. I then used the AWS console to complete the simple TFN registration process where I described the bus detector application and how only my family would be receiving messages from the number (AWS wants to make sure you're not spamming phone numbers).

Getting a .csv of the data:

Although this wasn't part of the intended use case, I'd like to put the bus data over time into a .csv so that I could make a dashboard (really I'm thinking about future projects here, it's not necessary for this project). I've started looking at plots of my data to understand the average time that the bus comes for each of its passes by my house. I'm starting to see how I could potentially use computer vision and text alerts for other use cases where this data might be more relevant.

import pytz
import boto3
import os
os.environ['AWS_SHARED_CREDENTIALS_FILE'] = '.aws_credentials'

# List the detection images that were saved to the S3 bucket
resp = boto3.client('s3').list_objects_v2(
    Bucket='bus-detector',
    Prefix='images/'
)

def get_row_from_s3_img(img):
    # Convert the upload time to local (Eastern) time
    local = img['LastModified'].astimezone(pytz.timezone('America/New_York'))
    return {
        'timestamp': local.isoformat(),
        'img_url': f'https://bus-detector.s3.amazonaws.com/{img["Key"]}',
        'class': img['Key'].split('_')[-1].split('.')[0]
    }

images = resp['Contents']
images.sort(reverse=True, key=lambda e: e['LastModified'])
rows = list(map(get_row_from_s3_img, images))

lines = ['timestamp,image_url,class']
for row in rows:
    lines.append(f'{row["timestamp"]},{row["img_url"]},{row["class"]}')

# Write one row per line and close the file when done
with open('data.csv', 'w') as file:
    file.write('\n'.join(lines) + '\n')

Summary:

Well that's it folks. You've been with me on a journey through my computer vision project to detect the school bus. I hope something here can be applied in your own project. In this article we actually ran the detection and set up text alerts, super cool. Through going through this exercise, I can see a number of other ways I could make my life easier using similar technology. Again, the first article about camera setup, data collection, and annotation in Roboflow is here. The 2nd part of the tutorial focused on downloading the data, creating a CometML data artifact and training the model here.

If you’ve tried Coursera or other MOOCs to learn python and you’re still looking for the course that’ll take you much further, like working in VS Code, setting up your environment, and learning through realistic projects.. this is the course I would recommend: Python Course.

Object Detection Using YOLOv5 Tutorial

Welcome! I’ve written this overview of my computer vision project to detect the school bus passing my house. This is for the person who wants to start playing with computer vision and wants to see a project from end-to-end. In this article I’ll start with explaining the problem I’m trying to solve, mention the camera I chose, show a quick opencv tutorial, create images and discuss the different python packages I'm using. The github repo to the project is here. This project will be covered as a series of a couple of blog posts to get through explaining the rest of the project, so be on the lookout for the next article! The next article will be a guest blog on Roboflow and I'll be sure to link it here.

In this article we’ll cover:

What I’m solving

The libraries I’m using

Setting up the camera

Creating images

Data collection process

The problem:

I wanted to set up a camera and use a computer vision model to detect the school bus when it is passing our house and alert me by sending a text message once the bus is detected. The school bus passes by our house, picks up someone else, and then turns around and then stops at the end of our driveway. This gives us a couple minutes to get my kids ready and out the door once alerted. And now I don’t have to wonder whether or not we’ve missed the bus go by.

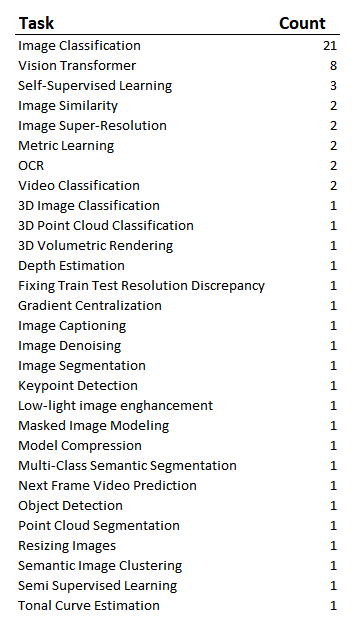

The Libraries:

For this project I'm using a number of libraries. Here's a high-level overview of what we'll be working with throughout the project:

yolov5 - This is the object detection model where we will custom train a yolov5 model on our own data. From their repo readme: "YOLOv5 is a family of object detection architectures and models pretrained on the COCO dataset, and represents Ultralytics open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development."

Roboflow - Loved this GUI for annotating and augmenting the image data that is then used to train our yolov5 model. Roboflow is an end-to-end CV platform and a library which also provides a Python SDK.

CometML – Comet allows you to basically take a snapshot of your code, dependencies, and anything else needed for your work to be reproducible. With one function you can compare all of your training runs very easily, it’ll even push your runs up to Github for you.

opencv - We're using opencv to access the camera.

Before we get started, if you’ve tried Coursera or other MOOCs to learn python and you’re still looking for the course that’ll take you much further, like working in VS Code, setting up your environment, and learning through realistic projects.. this is the course I used: Python Course.

Setting up the camera:

I wanted to share about the actual camera I used because finding an appropriate camera wasn’t overly intuitive. I knew that I'd need a friendly api that would work with opencv in python and there was a bit of research before I felt confident the camera would work for my purposes. I went with the ANNKE C500 5MP PoE IP Turret Security Camera, which was $60.

opencv can connect to an RTSP compliant security camera (RTSP stands for Real Time Streaming Protocol.. Real time is what we want, baby!). Once we have that setup, we’ll start thinking about collecting our data and then annotating that data using Roboflow.

The instructions for setting up this particular camera were pretty straightforward, I just followed the camera's "getting started" instructions.

Then enter your RTSP address from the camera as your network URL.

Keep that URL handy; you’ll be using it in a couple of places during this project.

The very first step I took once my camera was set up was just to run the example code in the opencv documentation (cv2) to make sure that things were working. So basically we're going to use VLC to see ourselves on camera and just check that it's all actually working. This is taken directly from the opencv documentation and I only changed the first line of code so that it would use my RTSP URL. I don't have the following code in the repo because it's really just testing and not part of the project.

```python
import numpy as np
import cv2 as cv

cap = cv.VideoCapture("my RTSP URL")
if not cap.isOpened():
    print("Cannot open camera")
    exit()

while True:
    # Capture frame-by-frame
    ret, frame = cap.read()

    # if frame is read correctly ret is True
    if not ret:
        print("Can't receive frame (stream end?). Exiting ...")
        break

    # Our operations on the frame come here
    gray = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)

    # Display the resulting frame
    cv.imshow('frame', gray)
    if cv.waitKey(1) == ord('q'):
        break

# When everything done, release the capture
cap.release()
cv.destroyAllWindows()
```

After I got to see myself on camera, the next thing I did was set up handling the credentials. This was just to keep my credentials out of version control by saving them in the .camera_credentials file which is excluded from version control with .gitignore. This script takes my credentials and creates the RTSP URL from them. When you call 'detect' in yolov5, the way you do that is by giving it the RTSP URL, so I define a function here called get_rtsp_url().

```python
from os.path import exists
import cv2


def get_rtsp_url():
    # we get the IP address of the camera from our router software
    camera_address = "192.168.4.81"

    # This path and port is documented in our security camera's user manual
    rtsp_path = "/H264/ch1/main/av_stream"
    rtsp_port = 554

    # The name of a file which we will exclude from version control, and save our username and password in it.
    creds_file = '.camera_credentials'
    if not exists(creds_file):
        # raising a bare string is a TypeError in Python 3, so raise a real exception
        raise FileNotFoundError(f'Missing configuration file: {creds_file}')

    # This variable will hold the username and password used to connect to
    # the security camera. Will look like: "username:password"
    with open(creds_file, 'r') as f:  # open() is how you can read/write files
        camera_auth = f.read().strip()

    # build and return the authenticated RTSP address
    full_url = f'rtsp://{camera_auth}@{camera_address}:{rtsp_port}{rtsp_path}'
    return full_url
```

Next is connecting_to_camera.py. In a while loop, we ask for the next frame from the camera and then save it to the images directory. I also have this create a new directory for each day and for each hour, which made it easier for me to find and keep track of images. This is adapted from the opencv documentation.
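One gap worth noting before the script: it imports connect_camera from camera, but that function isn't shown in this article. Here's a minimal sketch of what it might look like, assuming it simply opens the RTSP stream with cv2.VideoCapture. The build_rtsp_url helper, the parameter names, and their defaults are placeholders for illustration and may not match the code in the repo.

```python
from pathlib import Path


def build_rtsp_url(camera_auth, camera_address, rtsp_port=554,
                   rtsp_path="/H264/ch1/main/av_stream"):
    """Assemble an RTSP URL from "username:password" and the camera address.

    Hypothetical helper; the defaults mirror the values used in get_rtsp_url().
    """
    return f"rtsp://{camera_auth}@{camera_address}:{rtsp_port}{rtsp_path}"


def connect_camera(creds_file=".camera_credentials",
                   camera_address="192.168.4.81"):
    """Assumed shape of connect_camera: return an OpenCV capture for the stream."""
    # Imported here so build_rtsp_url can be used without OpenCV installed.
    import cv2

    # The credentials file holds a single "username:password" line.
    camera_auth = Path(creds_file).read_text().strip()
    return cv2.VideoCapture(build_rtsp_url(camera_auth, camera_address))
```

With something like this in camera.py, the `cap = connect_camera()` call in the script hands back the same kind of capture object we used in the test code earlier.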

```python
import numpy as np
import cv2 as cv
from camera import connect_camera
from datetime import datetime
import time
from pathlib import Path

cap = connect_camera()
if not cap.isOpened():
    exit()

img_dir = Path('./school_bus_images/')

while True:
    ret, frame = cap.read()
    if not ret:
        print("Can't receive frame (stream end?). Exiting ...")
        break

    ### resizing so it won't be so huge
    frame = cv.resize(frame, (int(frame.shape[1] * .5), int(frame.shape[0] * .5)))

    now = datetime.now()
    filename = now.strftime("%m-%d-%Y_%H-%M-%S-%f") + ".jpg"
    day = now.strftime("%m-%d-%Y")
    hour = now.strftime("%H")
    filepath = img_dir / day / hour / filename

    # create the day and hour directories (and img_dir itself) if they don't exist yet
    filepath.parent.mkdir(parents=True, exist_ok=True)

    cv.imwrite(str(filepath), frame)
    #cv.imshow('frame', frame)
    time.sleep(0.1)

# When everything done, release the capture
cap.release()
cv.destroyAllWindows()
```

Data Collection:

These images will work great because they're of the actual scenery and object I'm looking to detect. Originally I had taken a video on my phone of the school bus passing my house. I then used a short script in R to turn that video into images. Live and learn, the frames from the actual camera are much more effective. There were many other things I learned during the data collection process as well. This is really my first time working with image data. I had tried using photos of buses from the internet, but this introduced orientations, colors and other things that I didn't need. My front yard will always look the same (with the exception of snow and being darker in the winter), so the buses from the internet didn't make sense for the project. If I were to extend this project to other use cases I would absolutely think about leveraging images from the internet or using more data augmentation. I made sure to include plenty of partial buses and images of the bus going both directions past my house.

The model also detects cars and people quite well, but it was only trained on data that happened to be in front of my camera. I also annotated some bicycles, but that class isn't working well in my model at all.

I wish I had thought about how I planned on organizing my images from the beginning. Between using video from my phone, leveraging images from the internet, and needing different file formats for different algorithms (I started with yolov3 and then tried a classification algorithm before going with yolov5, and they all required different file formats and structures), I ended up with a lot of different data folders that I did not manage well. Then I put the project down for the summer because school was out, and when I came back in the fall I was really confused as to where my most recent images were. This is when I decided I was going to set up a Comet artifact to help manage my data, but we'll talk more about that in a future article.
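If you end up in the same spot before you have an artifact set up, a small script can at least tell you where your newest images live. This is just a sketch using the standard library, not something from the repo: the newest_images function and its parameters are hypothetical, and it assumes your images are .jpg files somewhere under one root folder, like the day and hour folders created above.

```python
from pathlib import Path


def newest_images(root, n=5, pattern="*.jpg"):
    """Return the n most recently modified images under root, newest first."""
    # rglob walks every subfolder, so nested day/hour directories are covered
    files = [p for p in Path(root).rglob(pattern) if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:n]


if __name__ == "__main__":
    # Print the parent folder of each of the newest images
    for path in newest_images("./school_bus_images/"):
        print(path.parent, path.name)
```

It relies on file modification times, so it will be misleading if the files were copied around in a way that reset those timestamps.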

Wonderful! We've talked about my considerations when getting images and hopefully your camera is now up and running. The next step is to annotate the images. I personally set up a free account in Roboflow; the UI was super intuitive (as you can see below) and I was able to annotate my images very quickly. You just click on the big "+ Create New Project" button, give the project a name, and select "object detection" from the "Product Type" menu. On the next page, select "upload" from the left nav and then you can upload your images.

Summary:

In this article we set ourselves up to begin a computer vision project. Although I’d love to keep this article going, I’m going to be breaking this project up into digestible chunks. We looked at the different libraries we’ll be using throughout the project, set up our camera, created images, and got ourselves set up to annotate all of those images. Next, we'll be talking about getting the data out of Roboflow, creating a data artifact in Comet, and training a yolov5 model. Since I'm chatting about Roboflow, the next article is actually going to be a guest blog on the Roboflow site. I'll make sure that all the articles are easy to navigate and find. After that we’ll choose the best model from the training runs by looking at the different experiments in Comet and use this to detect the bus live while watching the output live. Finally, we’ll be configuring how to send out text alerts using AWS. Lots of fun pieces in this project. It sounds like a lot, but I hope this feels like a project you’ll be able to do on your own! Stay tuned for the rest of the series. Click here to read the next article in the series.

Concatenating and Splitting Strings in R

Welcome! Here we're going to go through a couple examples of concatenating, subsetting and splitting strings in R. The motivation for this article is to show how simple working with strings can be with stringr. Working with strings was one of the areas that seemed intimidating and kept me from moving from Excel to R sooner, but many of the things I needed to do in Excel with strings are quite simple in R.

This article is really focusing on examples that I've experienced in Excel and how to do them in R. An example of when I've had to concatenate in the past is when someone handed me a dataset that included people's names and phone numbers, but they had not included a column with an id. I concatenated names and phone numbers to create a unique id for users. That's probably something you're not supposed to do (and I'd only recommend for an ad-hoc analysis without a ton of data), but it worked well enough for this particular use case. Using the "left" and "right" functions in Excel was also pretty common for me, and again, this is very easy to do in R. In this article we're going to cover:

Concatenating strings

Subsetting strings

Splitting strings

To do these string manipulations, we're going to be using the stringr and tidyr libraries. The cheat sheet for the stringr library can be found here. The tidyr cheat sheet can be found here. My friend Yujian Tang will be doing a similar article in python. You can find Yujian's article here.

Concatenate a String in R:

Concatenating is a fancy term for just chaining things together. Being able to manipulate strings is one of the skills that made me feel more comfortable moving away from Excel and towards using code for my analyses. Here, we're just going to call in the stringr, dplyr, and tidyr libraries, create some data, and then concatenate that data. I've chosen to add the code here in a way that is easy to copy and paste, and then I've also added a screenshot of the output.

```r
### install and call the stringr library
#install.packages("stringr")
#install.packages("dplyr")
#install.packages("tidyr")
library(stringr)
library(dplyr)
library(tidyr) # for the separate function in splitting strings section

#### Create data
column1 <- c("Paul", "Kristen", "Susan", "Harold")
column2 <- c("Kehrer", "Kehrer", "Kehrer", "Kehrer")

## concatenate the columns
str_c(column1, column2)
```

Super simple, but also rarely what we're actually looking to achieve. Most of the time I'll need some other formatting, like a space between the names. This is super easy and intuitive to do. You're also able to put multiple concatenations together using the "collapse" parameter and specify the characters between those.

```r
## Put a space between the names
str_c(column1, " ", column2)

### If you were trying to make some weird sentence, I added apostrophes for the names:
str_c("We'll put the first name here: '", column1, "' and we'll put the second name here: '", column2, "'")

### Using the collapse parameter, you're also able to specify any characters between the concatenations. So column 1 and 2 will be concatenated,
### but each concatenation will be separated by commas
str_c(column1, " ", column2, collapse = ", ")
```

NAs are not skipped by default: if any piece being concatenated is NA, str_c returns NA for that element. If you'd like the NA to be treated as ordinary text instead, you can leverage the "str_replace_na" function, which turns it into the string "NA". This might be helpful if you're doing further string manipulation later on and want all your data to be consistent for future manipulations.

```r
### If you're dealing with NA's, you'll just need to add the "str_replace_na" function if you'd like it to be treated like your other data.
### Here is the default handling of NAs
column3 <- c("Software Engineer", "Data Scientist", "Student", NA)
str_c(column1, " - ", column3, ", ")

### To make this work with the NA, just add "str_replace_na" to the relevant column
str_c(column1, " - ", str_replace_na(column3))
```

Subsetting a String in R:

Here, I was really focused on just sharing how to get the first couple elements or the last couple elements of a string. I remember in my Excel days that there would sometimes be a need to keep just the 5 characters on the right (or the left), especially if I received data where a couple columns had already been concatenated and now it needed to be undone. In R indexes start with "1", meaning the first object in a list is counted at "1" instead of "0". Most languages start with "0", including python. So here we go, looking at how you'll get the left and right characters from a string. First we'll get the original string returned, then we'll look at the right, then finally we'll do the same for the left.